You Have 5000 Days: Navigating the End of Work As We Know It. Part 10: Everyone Is Doing It*.

The human adaptation to technological upheaval over the “5000 days” horizon, spanning from late 2025 to an envisioned renaissance around 2039, serves as both a chronometer and a crucible. This period, which I have dubbed the Interregnum, encapsulates the turbulent transition from labor-defined existence to one of liberated potential, where AI reshapes not just economies but the very fabric of identity and purpose. As we delve into Part 10, it’s imperative to contextualize this moment within broader historical precedents: epochs like the Agricultural Revolution, which deskilled hunter-gatherer instincts while reskilling agrarian societies, or the Industrial Revolution, which mechanized craftsmanship yet birthed modern innovation.

* What are they doing? Using AI but afraid to say so.

Picture the scene: You’re immersed in your workflow, drafting an email, debugging code, or outlining a Q3 strategy document. A dedicated tab hums with potential: perhaps Grok generating ideas or Claude refining your code. You prompt, receive, copy, paste, and tweak to infuse your personal voice. Then, footsteps approach. Your boss looms, or a colleague passes by. Instinct kicks in: Alt-Tab, minimize, or switch windows in a reflexive blur. It’s the adult equivalent of a 1990s teen hiding video games from parents, except now we’re professionals concealing the most potent cognitive tool in human history. This “Alt-Tab reflex” encapsulates our strange limbo: We wield AI for unprecedented productivity gains, gains that would have bordered on science fiction just five years ago, yet the cultural narrative lags, branding its use as cheating or laziness. Beneath that lies a darker dread: Admitting AI’s role feels like confessing our own redundancy. “If the AI wrote 80% of this report,” the fear whispers, “why would they pay YOU more than its $20 monthly subscription?”

You see it all over the Internet: “AI SLOP!”, “LOOK, Em-Dashes, It is AI!”, “They didn’t write that, it was AI”. From some points of view, the pushback against AI is deserved. With image and video AI, novelty fatigue is a very real issue: we have already become numb and no longer seek the same level of novelty because we are haunted by the question “Is it AI or real?”. With text, the bloat does sometimes seem like word vomit. Writers, authors, and journalists have reason to be especially indignant about AI text, because it dilutes their work. However, this attitude has hitched a ride on all AI results, and we will face it head on, because AI is not going away, any more than the spreadsheet or the spell checker did.

These shifts, much like our current one, were marked by resistance, grief, and eventual mastery, underscoring that progress is not linear but dialectical, a Hegelian synthesis of thesis (traditional work) and antithesis (automation). Nuances abound: While the pace of AI acceleration outstrips past revolutions exponentially (per Moore’s Law analogs in compute), implications include amplified inequalities in access, where urban elites surge ahead while rural or developing regions lag, potentially exacerbating global divides. Edge cases, such as gig workers in precarious positions, highlight vulnerabilities, yet also opportunities for hybrid human-AI models that preserve agency.

This article is sponsored by Read Multiplex Members who subscribe here to support my work:

Link: https://readmultiplex.com/join-us-become-a-member/

It is also sponsored by many who have donated a “Cup of Coffee”. If you like this, help support my work.

Listen to the companion Podcast: https://rss.com/podcasts/readmultiplex-com-podcast/2531683

Psychologically, this Interregnum evokes a collective hero’s odyssey, where the monomyth’s archetypes mirror our internal and societal struggles. Joseph Campbell’s framework, with its cycles of departure, initiation, and return, resonates deeply as we confront the abyss of obsolescence. From an evolutionary standpoint, our brains, wired for scarcity and status through labor (as per Dunbar’s social brain hypothesis), rebel against abundance, manifesting in phenomena like “automation anxiety,” a term coined in recent studies linking job displacement to spikes in depression and identity crises. Yet, philosophical lenses offer solace: Stoicism, via Epictetus, reminds us that control lies not in external tools but in our responses to them; existentialism, through Camus, frames absurdity in work’s end as a call to rebellious creation. Considerations extend to cultural variations: collectivist societies will likely prioritize communal reskilling, while individualist ones emphasize personal mastery, which implies tailored strategies for global audiences. As we approach the recap of our journey thus far, these threads weave a tapestry of caution and empowerment: The pressure to adopt AI, if unexamined, risks alienation, but when harnessed volitionally, it propels us toward transcendence.

Building on this foundation, the Hero’s Journey arc we’ve traced across prior installments gains sharper relief. It transforms abstract disruptions into personal quests, where each part represents milestones in awakening, confrontation, and integration. With the Interregnum as our liminal space—chaotic yet fertile—we must navigate not just technological adoption but its psychological undercurrents, setting the stage for a comprehensive review of the ground covered. This lead-in underscores the essay’s core paradox: External imperatives can stifle internal growth, yet awareness of this dynamic equips us to master AI as allies in destiny’s forge.

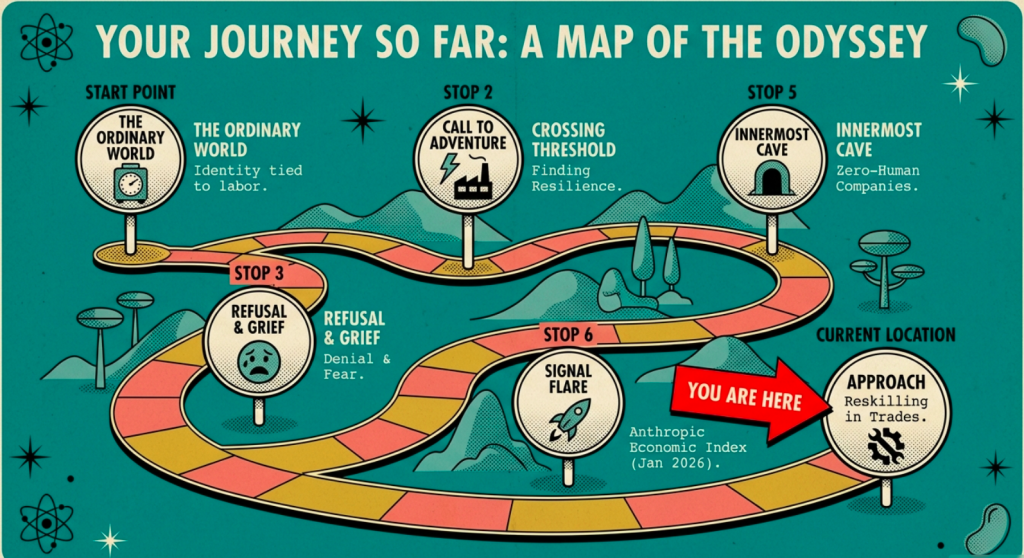

As we explore Part 10 of this series, it’s essential to pause and reflect on the ground we’ve covered, framing our collective narrative within Joseph Campbell’s monomyth, the Hero’s Journey. This archetypal structure has served as our guiding arc, transforming the seismic shifts brought by AI and automation from mere technological disruptions into a profound quest for human reinvention. The “5000 days” (approximately 13.7 years from our starting point around late 2025) symbolize not an apocalyptic countdown but a transformative timeline: a call to adventure in which we leave the Ordinary World of traditional labor behind, confront trials, and return with the elixir of abundance, purpose, and self-mastery.

In Part 1 (published December 24, 2025, at https://readmultiplex.com/2025/12/24/you-have-5000-days-how-to-navigate-the-end-of-work-as-we-know-it-part-1), we established the Ordinary World: the familiar yet exhausting realm of 9-to-5 jobs, where work defines identity, status, and survival. Drawing on historical roots from evolutionary psychology—women’s nurturing roles tied to lunar cycles and communal stability, men’s hunting for surplus—to the Industrial Revolution’s productivity tether, we explored how attachment to labor has shaped human self-worth. This set the stage for the Call to Adventure, the inexorable rise of AI signaling the end of work as we know it, with timelines projecting abundance by mid-2036 to mid-2041, while acknowledging the Refusal of the Call through denial and fear of obsolescence. Psychological insights from Julian Jaynes’s The Origin of Consciousness in the Breakdown of the Bicameral Mind and Joseph Chilton Pearce’s Magical Child highlighted consciousness evolution and innate wonder stifled by modern systems. Actionable plans included inner healing with Teal Swan’s The Completion Process and John Bradshaw’s Homecoming, experimenting with provisional selves via Herminia Ibarra’s Working Identity and Bill Burnett/Dave Evans’s Designing Your Life, and Stoic reflections from Marcus Aurelius’s Meditations.

Part 2 (published December 31, 2025, at https://readmultiplex.com/2025/12/31/you-have-5000-days-how-to-navigate-the-end-of-work-as-we-know-it-part-2) deepened this by examining grief processes amid job obsolescence, adapting Elisabeth Kübler-Ross’s five stages (denial, anger, bargaining, depression, acceptance) from On Death and Dying and On Grief and Grieving. We tied this to Carl Sagan-inspired optimism for post-scarcity societies, contrasting with zero-sum struggles, and invoked Viktor Frankl’s Man’s Search for Meaning, Albert Camus’s The Myth of Sisyphus, and Daniel Pink’s Drive for intrinsic value. Framing grief as early Tests and Allies in the journey, actionable advice included vulnerability audits for AI risks, financial buffers, skill diversification via MOOCs, networking, and forming “Abundance Transition Groups” for shared navigation.

In Part 3 (published January 1, 2026, at https://readmultiplex.com/2026/01/01/you-have-5000-days-navigating-the-end-of-work-as-we-know-it-part-3-the-player-piano), we built on Kurt Vonnegut’s Player Piano as a dystopian mentor, portraying mechanized society’s divides and dehumanization, drawing from Vonnegut’s GE experiences and influences like Huxley’s Brave New World. Delving into the psychological toll of automation, it represented Crossing the Threshold, urging proactive adaptation via vulnerability audits, emergency funds, MOOCs, and life simulations to avoid reactive trauma, while tying grief stages to the journey’s Road of Trials.

Parts 4 and 5 expanded toward book-length exploration, with Part 4 (published January 19, 2026, at https://readmultiplex.com/2026/01/19/you-have-5000-days-navigating-the-end-of-work-as-we-know-it-part-4-reframing-the-dawn-of-abundance) focusing on reframing via Scott Adams’s Reframe Your Brain, How to Fail at Almost Everything, Win Bigly, and Loserthink, outlining 160+ maneuvers rooted in neuroplasticity and hypnosis. Part 5 (published January 20, 2026, at https://readmultiplex.com/2026/01/20/you-have-5000-days-navigating-the-end-of-work-as-we-know-it-part-5-your-deskilling) examined deskilling through Anthropic’s Economic Index, highlighting 19-hour human-AI feedback loops, with insights from Mihaly Csikszentmihalyi’s flow and Adams’s reframing. The narrative arc here approached the Innermost Cave, confronting the “dark night of the soul” triggered by Zero-Human Companies (autonomous AI entities scaling across industries) and balancing de-skilling with reskilling in conjunction.

Part 6 (published January 27, 2026, at https://readmultiplex.com/2026/01/27/you-have-5000-days-navigating-the-end-of-work-as-we-know-it-part-6-the-dark-night-of-the-soul) recapped the series while exploring the Ordeal’s “Dark Night of the Soul,” introducing the Zero-Human Company experiment with tools like Grok and Claude, resurrecting “dumpster data” for innovation. It invoked Frankl, Camus, Jaynes, Pearce, and Pink for resilience, highlighting AI’s role in displacing professions while unlocking abundance.

Part 7 (published January 30, 2026, at https://readmultiplex.com/2026/01/30/you-have-5000-days-navigating-the-end-of-work-as-we-know-it-part-7-consider-phlebas) integrated Iain M. Banks’s Consider Phlebas and the Culture series as mentors for the Reward, portraying post-scarcity utopias with AI Minds enabling freedom, critiqued through Banks’s socialist lens. It mapped the monomyth’s stages, emphasizing actionable tools like talent stacking and mindset reframes.

Part 8 (published February 2, 2026, at https://readmultiplex.com/2026/02/02/you-have-5000-days-navigating-the-end-of-work-as-we-know-it-part-8-saving-your-wisdom) provided a comprehensive recap, reinforcing the monomyth’s progression to the Road Back. It addressed wisdom preservation via SaveWisdom.org’s 1000 questions, drawing on narrative therapy from Michael White and David Epston’s Narrative Means to Therapeutic Ends, and Teilhard de Chardin’s Noosphere.

Finally, Part 9 (published February 4, 2026, at https://readmultiplex.com/2026/02/04/you-have-5000-days-navigating-the-end-of-work-as-we-know-it-part-9-the-artisans-awakening) shifted focus to reskilling in blue-collar trades as fulfilling alternatives resistant to full automation, per Moravec’s Paradox, which underscores humans’ superiority in sensorimotor tasks. We listed the top 22 vocations, statistics on surging demand (e.g., 400,000+ U.S. trade jobs unfilled in 2025), and nuances such as these roles evolving into hobbies in advanced robotic eras. This part embodied the Approach to the Innermost Cave, balancing de-skilling with empowering reskilling.

Across these parts, the Hero’s Journey arc persists: We’ve refused the call through grief and fear, met mentors in literature and tools like SaveWisdom.org, faced tests in economic reports and Zero-Human tech, and glimpsed rewards in abundance. Yet, the journey is far from complete; the Road Back demands mastery over tools like AI, lest we falter in the Interregnum, the chaotic transitional epoch between old labor paradigms and emergent ones.

Edge cases abound: What of those in remote areas with limited AI access? Or cultural contexts where work equates to spiritual duty? These considerations underscore the journey’s universality while highlighting individual variations. As we enter Part 10, we confront a subtle Ordeal: the psychological pressure to adopt AI, which paradoxically impedes true integration.

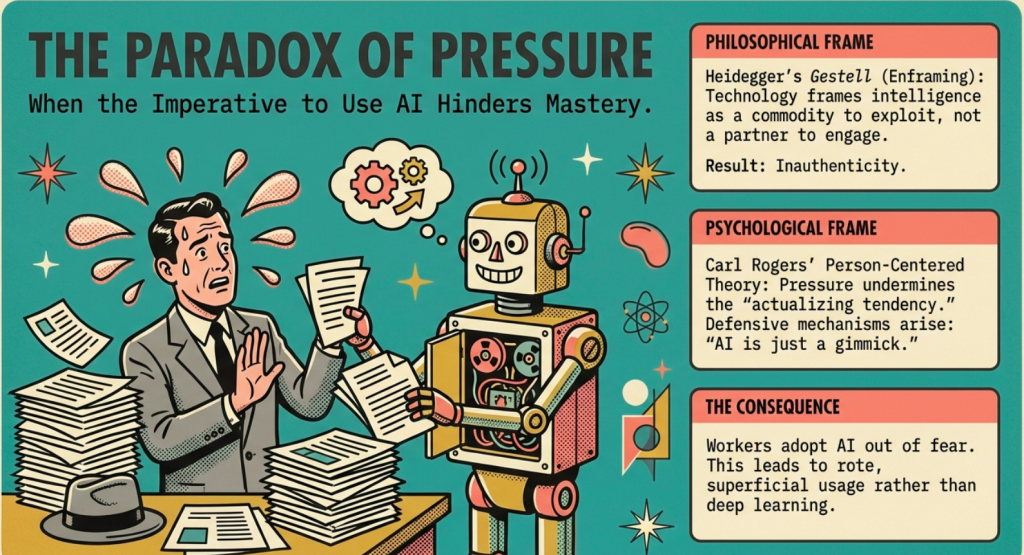

The Paradox of Pressure: How the Imperative to Use AI Can Hinder Its Mastery

In the grand tapestry of human evolution, technological tools have always been double-edged swords: liberators of potential and disruptors of equilibrium. As we navigate the Interregnum, the liminal space between the fading era of human-centric labor and the dawning age of AI-augmented existence, the pressure to incorporate artificial intelligence into our work emerges as a profound impediment to its genuine adoption.

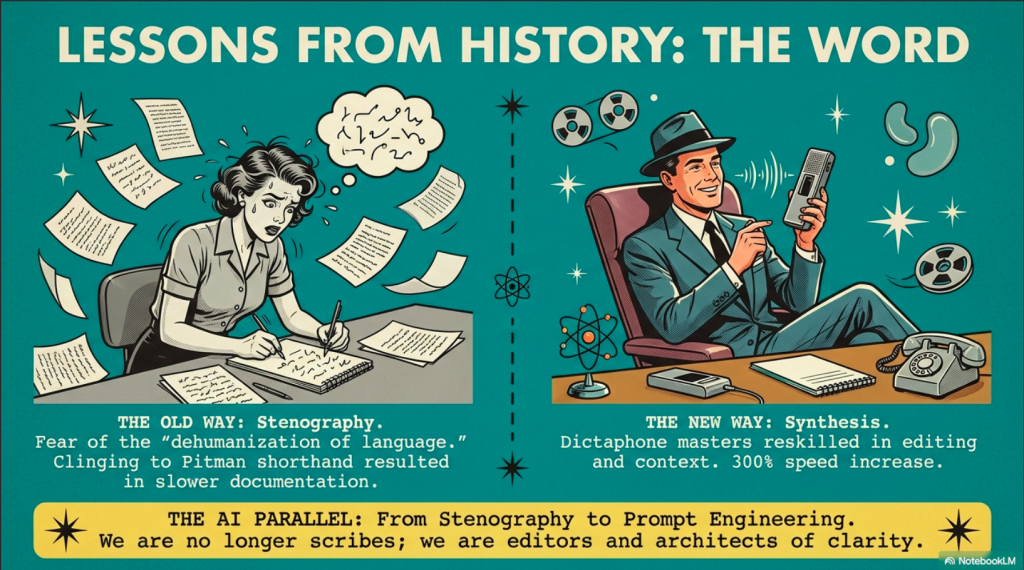

This part delves philosophically and psychologically into this paradox, exploring how external mandates and societal urgings foster resistance, superficial engagement, and existential dissonance, ultimately delaying mastery. Drawing analogies from historical technological shifts (the calculator in mathematics, spreadsheets in finance, the move from stenography to the Dictaphone in transcription, and from dictionaries to spell-checking in writing), we uncover patterns where resistance to new tools not only perpetuates obsolescence but exacerbates the gap in the 5000-day timeline. Mastery, we argue, demands not coerced compliance but volitional integration, transforming potential victims into architects of destiny, even amid de-skilling and requisite re-skilling.

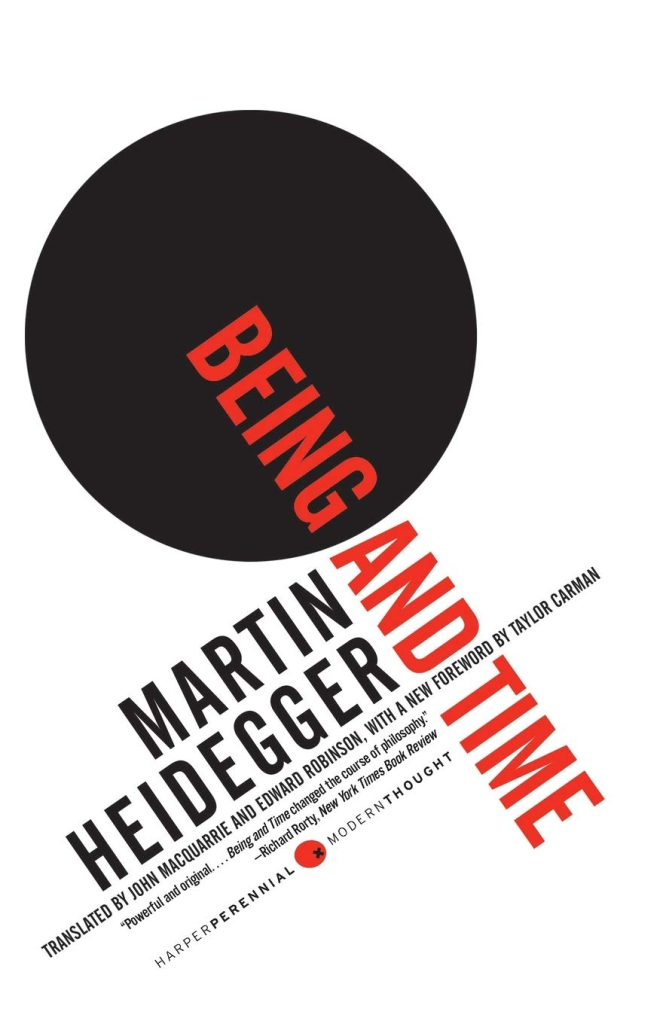

Philosophically, this pressure echoes philosopher Martin Heidegger’s concept of Gestell (enframing), where technology reduces the world to a standing-reserve of resources, alienating us from authentic Being. When AI is thrust upon us as an imperative, via corporate policies, economic necessities, or cultural narratives, it frames intelligence as a commodity to exploit rather than a partner to engage. This enframing breeds inauthenticity: workers adopt AI not out of curiosity or necessity but fear of irrelevance, leading to rote usage that stifles creativity. And because you feel, in a private, honest moment, that it could perhaps do your job better, using AI feels like being a fraud or cheating. Shame abounds at scale in this moment.

Martin Heidegger, born in 1889 in Messkirch, Germany, emerged as one of the 20th century’s most influential philosophers, profoundly shaping existential phenomenology. Raised in a conservative Catholic family in the Black Forest region, Heidegger’s early life was steeped in rural traditions and religious inquiry, which contrasted sharply with the rapid industrialization of Europe. He initially studied theology at the University of Freiburg, intending to become a Jesuit priest, but shifted to philosophy under the influence of Edmund Husserl, the founder of phenomenology. This pivot marked the beginning of Heidegger’s quest to uncover the fundamental structures of existence, moving away from abstract metaphysics toward a concrete analysis of human being-in-the-world. His experiences during World War I, where he served briefly, exposed him to the mechanized horrors of modern warfare, planting seeds of skepticism toward technological progress that would later blossom in his critiques.

Heidegger’s seminal work, Being and Time (1927) (https://amzn.to/46rvA1D), laid the groundwork for his later ideas on technology. In this text, he introduced Dasein—the human way of being—as inherently temporal and relational, emphasizing care (Sorge) as the essence of existence. Influenced by Aristotle, Kierkegaard, and Nietzsche, Heidegger critiqued the Cartesian subject-object divide, arguing that humans are not detached observers but embedded in a world of practical engagements. The post-war Weimar Republic’s cultural upheaval, marked by economic instability and existential angst, fueled his exploration of authenticity versus inauthenticity. By the 1930s, amid rising Nazism—which Heidegger controversially supported briefly as rector of Freiburg University—his thought evolved to question how modernity’s rationalism obscured the primordial question of Being (Sein), setting the stage for his turn (Kehre) toward poetry, language, and technology as modes of revealing.

The concept of Gestell crystallized in Heidegger’s later essays, particularly “The Question Concerning Technology” (1954) (https://amzn.to/4qlwT9u), amid the Cold War’s technological arms race and post-WWII reconstruction. Drawing from his earlier phenomenology, Heidegger viewed technology not as neutral tools but as a mode of revealing (aletheia) that challenges-forth (herausfordern) nature into resources. His rural upbringing and affinity for Hölderlin’s poetry informed this: the Black Forest paths symbolized a meditative dwelling (wohnen) in contrast to industrial exploitation. Personal crises, including his denazification trials and retreat to a mountain hut in Todtnauberg, intensified his reflections on how modern science and technology enframed the world, reducing rivers to hydroelectric power or forests to lumber reserves. This evolution reflected a broader shift from anthropocentric existentialism to a more ontological poetics, where technology’s essence lay in its totalizing demand for efficiency.

Heidegger’s path to these ideas was not linear but dialectical, mirroring the Hegelian influences he critiqued. Early mentors like Husserl provided the phenomenological method—bracketing assumptions to describe lived experience—but Heidegger radicalized it by historicizing Being. The interwar period’s intellectual ferment, including dialogues with Hannah Arendt and Karl Jaspers, honed his views on thrownness (Geworfenheit) into a technological age. Post-1945, disillusioned by atomic bombs and mass media, he articulated Gestell as the supreme danger, yet also a saving power if questioned poetically. Nuances include his ambiguous politics: while his Nazi ties taint his legacy, they underscore how ideology can enframe thought, paralleling today’s AI-driven surveillance states. Edge cases, like his rejection of cybernetics, highlight resistance to reductive models of human cognition.

Understanding Heidegger’s history is vital because it contextualizes Gestell beyond abstraction, revealing it as a response to modernity’s crises—crises echoed in our AI Interregnum. His biography illuminates how personal and historical traumas shaped a philosophy warning against technology’s alienating grip, urging us to reclaim authentic engagement. Without this, we risk superficial application, missing how enframing perpetuates inauthenticity in coerced AI adoption. Implications extend to ethics: Heidegger’s flaws remind us to critically interrogate philosophies, avoiding blind endorsement. In the 5000 days, this understanding fosters volitional mastery, transforming pressure into poetic dwelling.

Critically, grasping Heidegger’s development equips us to navigate AI’s paradoxes with depth, recognizing that resistance stems not from luddism but from a primal fear of lost Being. His ideas, born from existential upheavals, offer a lens for reskilling: not mere technical proficiency, but a reattunement to the world. Edge cases, such as AI in art or therapy, demand this insight to prevent enframing creativity into data points. Ultimately, Heidegger’s vital legacy lies in provoking the question of technology’s essence, ensuring our Hero’s Journey yields not domination but harmonious revelation in abundance.

Psychologically, drawing from Carl Rogers’ person-centered theory (https://amzn.to/4qjuyfr), such pressure undermines the “actualizing tendency”—our innate drive toward growth—by imposing incongruence between one’s self-concept (e.g., “I am a skilled artisan”) and the organismic experience (e.g., “AI outperforms me”). The result? Defensive mechanisms like rationalization (“AI is just a gimmick”) or overcompensation (frenetic, ineffective experimentation), both impeding deep learning. Nuances arise in individual differences: Introverts may withdraw further under pressure, per Eysenck’s arousal theory, while extroverts might superficially “perform” adoption without internalization. Implications extend to societal levels; widespread resentment could fuel Luddite backlashes, slowing collective progress in the Interregnum.

Carl Rogers, born in 1902 in Oak Park, Illinois, was a pioneering American psychologist who revolutionized psychotherapy with his humanistic approach. Growing up in a strict Protestant family on a farm, Rogers experienced a sheltered childhood marked by religious rigor and intellectual curiosity, which initially led him to study agriculture at the University of Wisconsin. However, a trip to China for a Christian conference in 1922 exposed him to diverse worldviews, sparking a shift away from theology toward psychology. He pursued graduate studies at Union Theological Seminary and then Teachers College, Columbia University, under influences like John Dewey’s pragmatism and Otto Rank’s relational therapy. Early clinical work with delinquent children at the Society for the Prevention of Cruelty to Children in Rochester, New York, during the Great Depression, revealed the limitations of directive Freudian methods, prompting Rogers to emphasize empathy and client autonomy over expert authority.

Rogers’s core ideas coalesced in the 1940s and 1950s, amid post-WWII optimism and the rise of existential-humanistic psychology. His seminal Client-Centered Therapy (1951) (https://amzn.to/4a58Cji) introduced the “actualizing tendency”—an innate motivational force toward growth and fulfillment—rooted in his observations that individuals thrive when provided a nurturing environment free from judgment. Influenced by Abraham Maslow’s hierarchy of needs and Kurt Goldstein’s organismic theory, Rogers critiqued behaviorism’s mechanistic view of humans, arguing for a holistic perspective where self-concept (one’s perceived identity) aligns with organismic experience (innate feelings and potentials). His time at the University of Wisconsin-Madison and later the Western Behavioral Sciences Institute in La Jolla, California, during the countercultural 1960s, further refined these concepts through encounter groups, emphasizing congruence, unconditional positive regard, and empathic understanding as conditions for change.

The person-centered theory fully matured in works like On Becoming a Person (1961), responding to the era’s social upheavals, Cold War anxieties, civil rights movements, and questioning of authority. Rogers’s own struggles with depression in the 1950s, treated through his methods, underscored the theory’s personal relevance. He drew from phenomenology, viewing therapy as facilitating self-actualization rather than curing pathology, a radical departure from psychoanalysis. Global travels, including peace-building efforts in conflicted regions like Northern Ireland and South Africa in the 1970s-1980s, applied his ideas beyond clinics to education and conflict resolution, highlighting human potential amid societal pressures.

Rogers’s journey to these ideas was iterative, blending empirical research with personal evolution. Early mentors like Leta Hollingworth at Columbia fostered his empirical rigor, while disillusionment with rigid diagnostics during WWII counseling for veterans radicalized his client-led approach. The humanistic “third force” psychology, co-founded with Maslow against psychoanalysis and behaviorism, reflected mid-century cultural shifts toward individualism. Nuances include criticisms of his optimism as naive, yet his empirical studies (e.g., Wisconsin counseling research) validated core tenets. Edge cases, like applying theory to non-verbal clients or cross-culturally, reveal adaptations, paralleling AI’s diverse user contexts.

Understanding Rogers’s history is vital as it grounds person-centered theory in real-world responses to authoritarian structures—echoed in AI’s coercive adoption. His biography shows how personal and societal crises birthed a philosophy of intrinsic growth, warning against external impositions that create incongruence. Without this, we overlook how pressure erodes the actualizing tendency, leading to superficial AI use. Implications for ethics: Rogers’s emphasis on empathy urges humane AI integration. In the 5000 days, this fosters volitional reskilling, turning impediments into self-directed mastery.

Critically, grasping Rogers’s development empowers navigation of AI’s psychological paradoxes, viewing resistance as a defense of self-concept rather than obstinacy. His ideas, forged in humanistic rebellion, provide a lens for reattunement: not forced compliance, but congruent growth. Edge cases, like AI in mental health, demand this insight to avoid dehumanizing therapy. Ultimately, Rogers’s legacy provokes questioning external mandates, ensuring the Hero’s Journey yields authentic fulfillment in abundance.

New Tools, Phronesis And The Imposter Syndrome

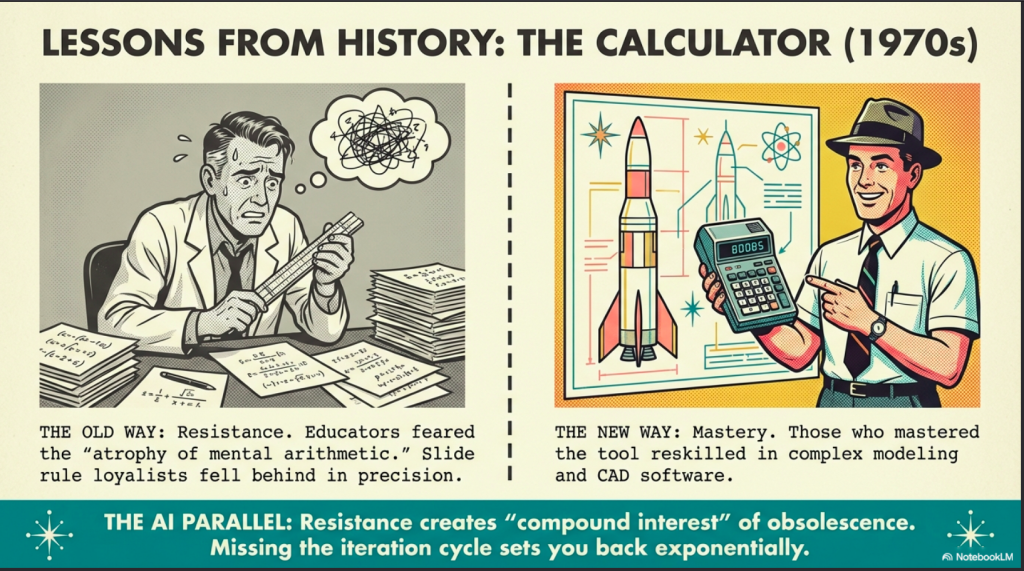

Consider the calculator’s advent in the 1970s, a pivotal analogy for AI’s computational augmentation. Philosophically, it challenged Plato’s ideal of mathematics as pure dialectic, reducing eternal forms to button-presses and risking the atrophy of mental arithmetic—a deskilling that freed minds for higher abstraction yet sparked resistance. Psychologically, educators and professionals initially viewed it as a crutch, fearing it would erode cognitive discipline; studies from the era (e.g., National Council of Teachers of Mathematics reports) documented anxiety akin to learned helplessness, where pressure from curricula mandates led to superficial reliance rather than integrated use.

Dive deeper: In engineering, early adopters who resisted (clinging to slide rules) fell behind in precision tasks, exemplifying how refusal during the “calculator interregnum” (the 1970s–1980s transition) extended obsolescence. Those mastering it, however, reskilled in complex modeling, accelerating innovations like CAD software. Edge cases: In resource-poor regions, calculator scarcity amplified inequality; today, AI access gaps mirror this. Resistance here sets one further behind in the 5000 days, as unmastered tools compound exponential tech growth—missing the “compound interest” of iterative AI proficiency.

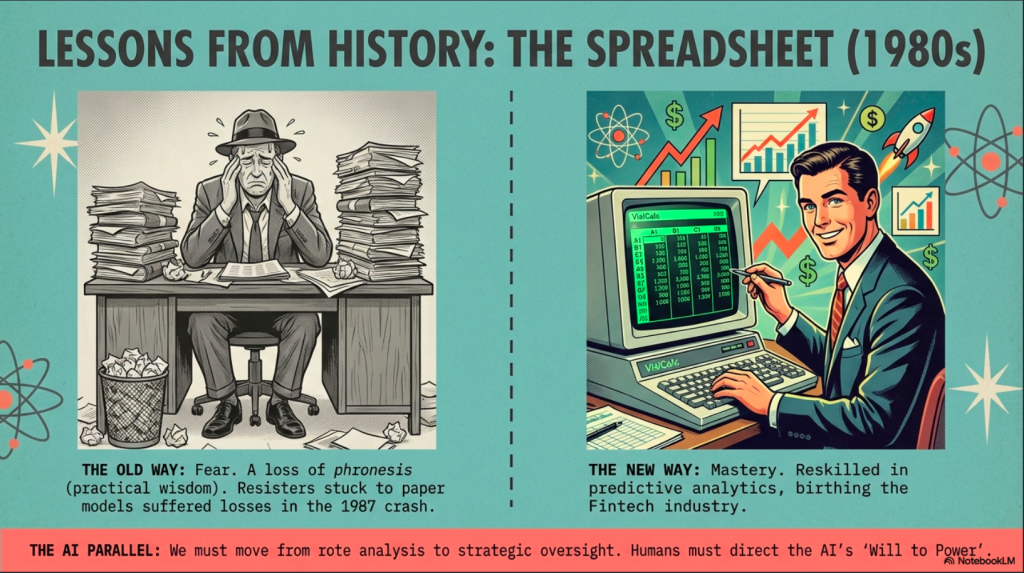

Similarly, the spreadsheet’s rise in finance, epitomized by VisiCalc (1979) and Lotus 1-2-3 (1983), parallels AI’s data-handling prowess. Philosophically, it embodied Nietzsche’s “will to power” through quantification, empowering analysts to simulate scenarios but deskilling manual ledger-keeping, which some saw as eroding financial intuition—a loss of phronesis (practical wisdom). Psychologically, the pressure from Wall Street’s competitive culture induced imposter syndrome; a 1980s Harvard Business Review analysis noted bankers resisting spreadsheets due to fear of errors in untrusted “black boxes,” leading to hybrid workflows that halved efficiency.

Deep dive: Resisters, like those sticking to paper models during the 1987 crash, suffered greater losses from delayed insights, while masters reskilled in predictive analytics, birthing fintech. In the Interregnum analogy, AI agents demand similar reskilling: from rote analysis to strategic oversight. Resistance, fueled by pressure, victimizes users, turning them into passive overseers; mastery controls destiny, as in algorithmic trading where humans direct AI’s “will.” Implications: Over-reliance without understanding risks bubbles, as in 2008; edge cases include ethical dilemmas, like AI-biased lending, requiring philosophical vigilance.

The transition from stenography to the Dictaphone in transcription offers a tactile analogy, highlighting AI’s voice-to-text evolution. Introduced in the early 1900s as an outgrowth of the phonograph technology pioneered by Thomas Edison, the Dictaphone promised efficiency but met philosophical resistance as a dehumanizer of language, echoing Walter Ong’s oral-literate divide, where shorthand’s rhythmic art yielded to mechanical reproduction, deskilling kinesthetic memory. Psychologically, secretaries and court reporters experienced identity crises; a 1920s study in The Journal of Applied Psychology revealed heightened stress from mandated adoption, manifesting as transcription errors from resentment-driven haste.

Dive deep: Resisters, clinging to Pitman shorthand during the interwar period, were outpaced in legal and medical fields, where Dictaphone masters reskilled in editing and context—accelerating documentation speed by 300%. In our 5000 days, AI transcription tools like Whisper AI demand analogous mastery: not victimhood to inaccuracies but reskilling in prompt engineering for nuance capture. Pressure impedes this, fostering superficial dictation; control averts destiny’s derailment, even in de-skilled rote listening.

Finally, dictionaries to spell-checking software (e.g., WordStar’s 1979 checker, evolving to Grammarly) analogizes AI’s linguistic aids. Philosophically, it questions Locke’s tabula rasa—does automated correction erode etymological depth, turning writers into passive vessels? Psychologically, pressure in education (e.g., 1980s mandates) triggered reactance theory responses, per Brehm: students rebelled by over-relying or ignoring tools, stunting vocabulary growth.

Deep dive: Resisters in publishing, eschewing early checkers, lagged in productivity during the digital shift, while masters reskilled in stylistic refinement, birthing content explosion. Edge cases: Non-native speakers benefit disproportionately, but cultural biases in AI (e.g., favoring American English) demand critical reskilling. In the Interregnum, resistance to AI writing assistants sets one back exponentially, as unmastered tools amplify competitors’ edges.

The fear is real in this new technological “Don’t ask, don’t tell” quagmire. Many will develop imposter syndrome as we use tools to take over the manual work we did in prior eras. Imposter syndrome, first identified in 1978 by psychologists Pauline Clance and Suzanne Imes in their study of high-achieving women, refers to a persistent internal belief that one’s success is undeserved, often attributing accomplishments to luck, timing, or deception rather than competence. It manifests as chronic self-doubt, fear of exposure as a “fraud,” and overworking to compensate, despite external evidence of ability. Rooted in cognitive distortions like perfectionism and attribution bias, it disproportionately affects underrepresented groups (women, minorities, and first-generation professionals) but is universal, with studies (e.g., Clance’s 1985 The Impostor Phenomenon, https://amzn.to/3Mvtze2) estimating that 70% of people experience it at some point. Nuances include its intersection with the inverse of the Dunning-Kruger effect, where competent individuals undervalue their skills, and cultural factors that amplify it in competitive environments like tech or finance.

In the context of AI adoption during the Interregnum, imposter syndrome intensifies under pressure to integrate tools that outperform human capabilities in specific tasks, eroding self-concept as a skilled worker. For instance, a data analyst mandated to use AI for forecasting may feel their insights are “faked” by the machine, attributing any success to the tool rather than their oversight, leading to hesitation in full mastery. This aligns with Heidegger’s enframing and Rogers’s incongruence: AI’s “black box” nature fuels doubts about genuine contribution, fostering superficial use to avoid perceived incompetence. Implications include reduced innovation, as sufferers avoid risks, and mental health strains like anxiety, per modern research (e.g., 2020s studies linking tech disruption to imposter spikes in STEM fields).

Applying this to the 5000 days timeline, imposter syndrome acts as a hidden impediment, setting individuals further behind by perpetuating resistance akin to historical tool shifts. In finance’s spreadsheet era, bankers felt fraudulent relying on VisiCalc, delaying reskilling in analytics; today, AI users might undervalue their prompt engineering or ethical oversight as “not real work,” missing opportunities for magnified abilities. Edge cases: High-achievers in creative fields like writing may experience amplified syndrome when AI assists, fearing loss of authenticity, while novices might paradoxically feel less impostery by leveraging tools as equalizers. Overcoming it requires reframing via Rogers’s actualizing tendency—fostering congruence through volitional practice and support groups.

Ultimately, addressing imposter syndrome in AI contexts demands awareness and strategies like cognitive behavioral techniques (e.g., journaling achievements) or mentorship, transforming it from a victimizing force into a catalyst for growth. In the Hero’s Journey arc, it represents an inner Ordeal, where confronting self-doubt yields mastery over tools, ensuring not just survival but thriving in abundance. Nuances: Generational differences matter. Gen Z, as digital natives, may experience less of the syndrome with AI, while Boomers, tied to traditional skills, may face more; implications extend to policy, urging workplaces to provide training that builds confidence rather than mandates. Either way, we must move past the “Don’t Ask, Don’t Tell” phase of AI usage.

AI Is Spell Check For Ideas

Across these analogies, a pattern emerges: Pressure begets resistance, deskilling without reskilling, and victimization in transitional epochs. Yet the Hero’s Journey demands transcendence: the Interregnum requires mastering AI as an ally, not an overlord. Philosophically, this aligns with Sartre’s existential freedom: We choose our essence through tools, controlling destiny via agentic use (e.g., customizing AI agents for synthesis).

Psychologically, flow states (Csikszentmihalyi) (https://amzn.to/3NUQdgx) arise from volitional engagement, mitigating pressure’s impediments. Implications: Societal policies should foster curiosity-driven adoption; edge cases like AI addiction necessitate boundaries. In the 5000 days, mastery ensures the Return with Elixir: a world where de-skilling liberates, reskilling elevates, and humans orchestrate abundance. Resist the pressure’s paradox, and emerge not as victims, but victors.

Throughout, edge cases, such as remote access barriers or cultural work ethics, highlight the journey’s universality yet individuality. The Road Back now beckons: marshaling AI not as a threat but as an amplifier of human potential. This Ordeal demands volitional integration, transforming magnified abilities into new vocations. As heroes, we return not diminished but empowered, crafting destinies in abundance.

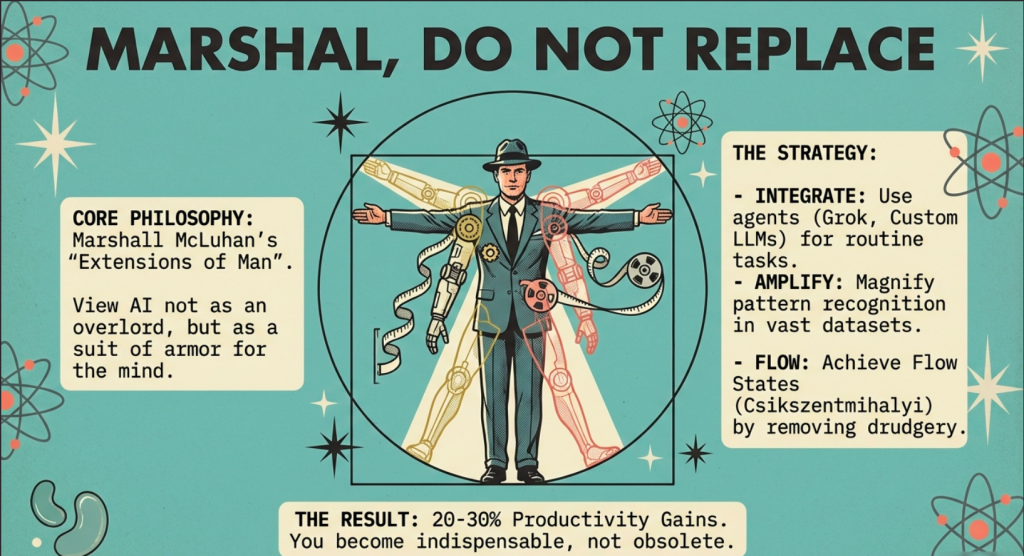

In the Interregnum of the 5000 days, marshaling AI begins with a mindset shift: viewing it as an extension of your cognitive and creative faculties rather than a replacement. Philosophically, this echoes Marshall McLuhan’s “extensions of man,” where tools amplify innate strengths (your analytical prowess, empathy, or innovation), multiplied exponentially. McLuhan famously said that all technologies are extensions of man: the wheel is an extension of the foot, glasses are an extension of the eye, a hammer is an extension of the fist. I assert that AI is an extension of the central nervous system. In your current job, integrate AI agents for routine tasks: use tools like Grok or custom LLMs to automate data analysis, generating insights that free you for strategic oversight.

For instance, a marketer might employ AI to parse consumer trends from vast datasets, magnifying pattern-recognition abilities to craft hyper-personalized campaigns. Psychologically, this fosters flow states (Csikszentmihalyi), reducing burnout while enhancing efficacy; nuances include initial learning curves, where iterative prompting hones skills, turning deskilling in rote work into reskilling in orchestration. Implications extend to performance: studies (e.g., McKinsey reports on AI augmentation) show 20-30% productivity gains, but edge cases like data privacy concerns require ethical vigilance. Ultimately, this magnification positions you as indispensable, not obsolete, in transitional roles.

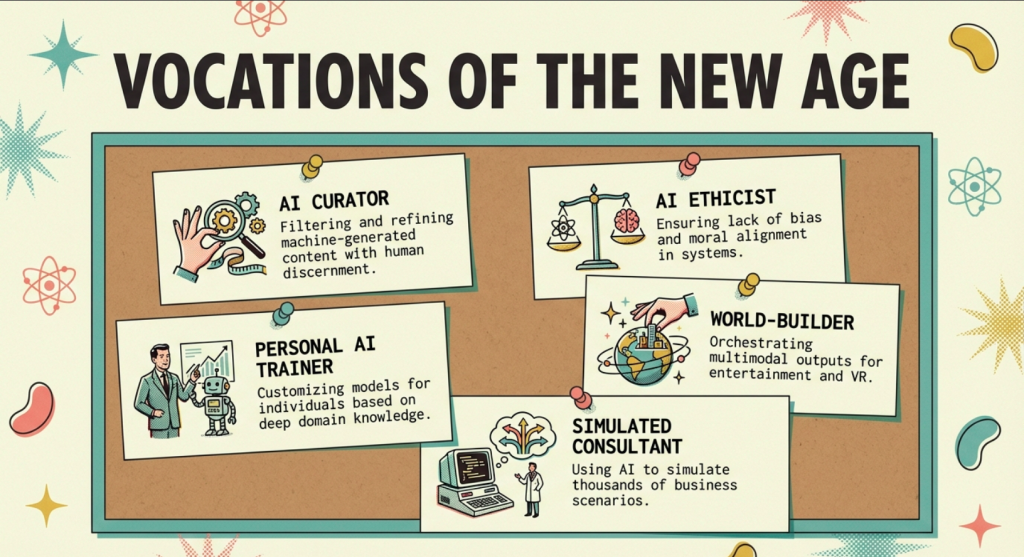

AI’s amplification unlocks a cascade of new jobs born from your best abilities, now supercharged. If your strength lies in problem-solving, AI becomes a brainstorming partner, simulating scenarios to ideate ventures like AI-assisted consulting firms. Consider a teacher whose pedagogical empathy is magnified: AI tools generate adaptive curricula, enabling the creation of personalized edtech startups that scale globally. This future-oriented marshaling demands proactive experimentation: build AI workflows via no-code platforms like Zapier or Bubble, blending human intuition with machine precision. Of course, the interactive brainstorming process of vibe coding apps with AI is another aspect of this symbiosis.

Philosophically, it aligns with Sartre’s existentialism: you author your essence through tool mastery, avoiding victimization by designing jobs around amplified traits. Psychologically, self-efficacy theory (Bandura) predicts boosted confidence from early wins, but implications involve adaptation fatigue; edge cases, such as over-reliance leading to skill atrophy, necessitate balanced human-AI symbiosis. In the 5000 days, this creates a portfolio career, where one role evolves into many, each leveraging magnified abilities for economic resilience.

The Marshaling Of the Conductor

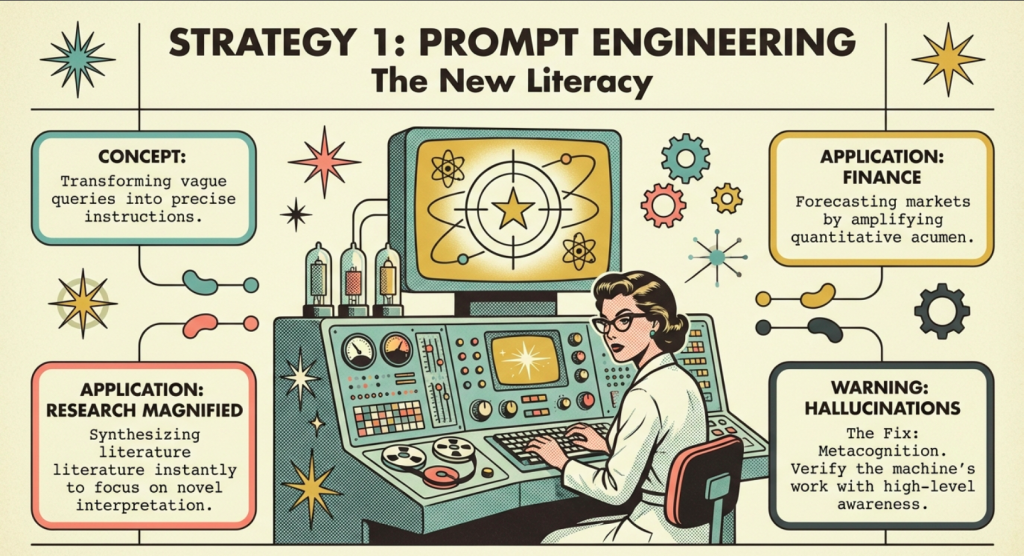

To marshal AI effectively, cultivate “prompt engineering” as a core competency, transforming vague queries into precise instructions that elicit tailored outputs. Oh yes, the “experts” say “Prompt engineering is so 2023, AI is too smart now.” Well, we will see that this is shortsighted and misses the entire point of why we prompt AI. The outputs are based on the inputs: the question, the prompt, the motif, and the goals.

In your current role, this magnifies research capabilities: a researcher might prompt AI to synthesize literature reviews, accelerating discoveries while you focus on novel interpretations. Examples abound in finance, where AI models forecast markets, amplifying quantitative acumen to devise innovative investment strategies. Nuances include cultural biases in AI outputs, requiring critical review to ensure accuracy; implications for future jobs involve inventing “AI curators,” roles curating machine-generated content, born from your discernment magnified. Psychologically, this builds metacognition, the awareness of thinking processes, enhanced by AI feedback loops. Edge cases like hallucinations in models demand verification protocols, but overall, it empowers you to pioneer fields like AI ethics auditing, where human judgment reigns supreme in an automated world.

Integration of multimodal AI, which handles text, images, and data, further empowers by magnifying sensory and analytical abilities. Currently, tools like Grok Imagine or Midjourney amplify visual thinkers: a designer uses them to prototype concepts rapidly, iterating designs that once took weeks. This deskills manual drafting but reskills in conceptual innovation, leading to new jobs in generative art curation or virtual reality world-building.

Philosophically, it challenges Immanuel Kant’s aesthetics, blending human creativity with algorithmic serendipity for emergent beauty. Psychologically, it combats creative blocks via infinite variations, fostering divergent thinking (Guilford). Implications include intellectual property shifts, where magnified abilities create hybrid human-AI copyrights; edge cases involve accessibility, as those without high-end hardware lag, underscoring the need for inclusive tools. In future vocations, this spawns “AI symphonists,” conducting and orchestrating multimodal outputs for industries like entertainment, magnifying your orchestration skills into entrepreneurial empires.

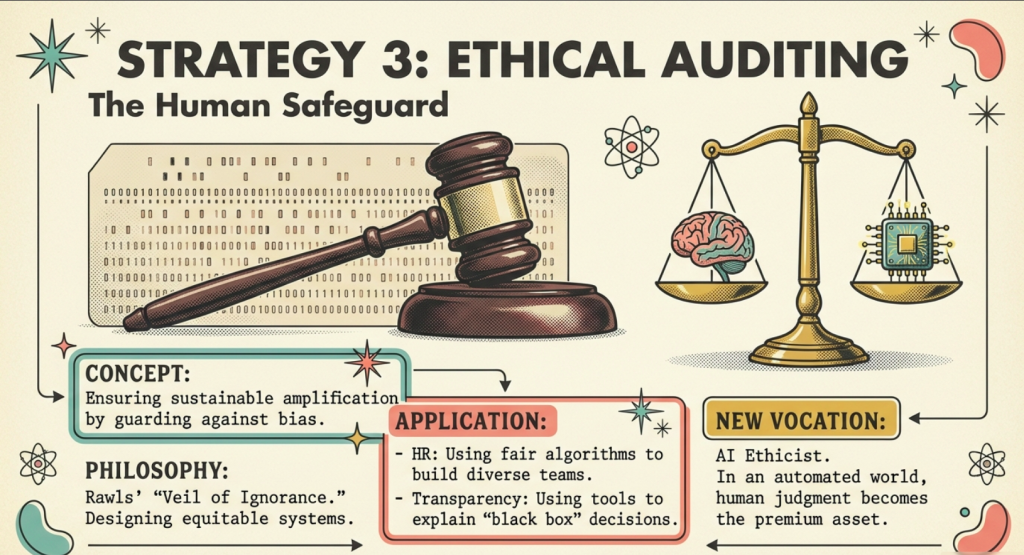

Ethical marshaling of AI ensures sustainable amplification, guarding against pitfalls like bias amplification that could undermine your power. In your job, audit AI decisions: for example, an HR professional uses fair algorithms for hiring, magnifying impartiality to build diverse teams. This ethical lens magnifies leadership abilities, paving paths to new roles in AI governance consultancies.

Nuances: Transparency in black-box models requires tools like SHAP for explainability, turning potential opacity into strength. Philosophically, it invokes Rawls’s veil of ignorance, designing equitable systems. Psychologically, it enhances moral reasoning, per Kohlberg’s stages, but implications involve regulatory landscapes evolving in the 5000 days. Edge cases, such as AI in sensitive fields like healthcare, demand hybrid oversight, where your empathy magnified prevents dehumanization, birthing vocations like “AI ethicists” that secure long-term relevance.

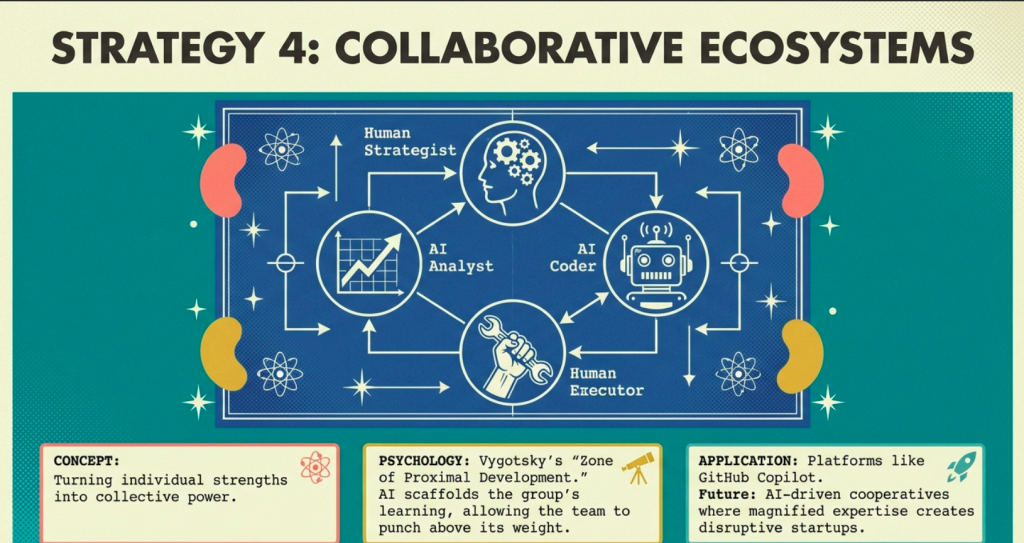

Collaborative AI ecosystems amplify social intelligence, turning individual strengths into collective power. Currently, platforms like GitHub Copilot magnify coding abilities for developers, enabling faster prototyping and team synergies. This extends to future jobs: Imagine co-founding AI-driven cooperatives where members’ magnified expertise (e.g., one in strategy, another in execution) creates disruptive startups. Psychologically, it leverages Vygotsky’s zone of proximal development, where AI scaffolds group learning. Philosophically, it echoes Habermas’s communicative action, fostering discourse over domination. Implications: Network effects accelerate innovation, but edge cases like digital divides require bridging initiatives. In the Interregnum, this marshaling evolves jobs into fluid roles, where your interpersonal skills, now AI-augmented, invent collaborative paradigms like virtual think tanks.

Own Your Own AI! Say it again. Data sovereignty in AI use magnifies autonomy, ensuring you control the amplification narrative. You achieve this by building your own local AI; I call this an Intelligence Amplifier and Your Wisdom Keeper. I write about it often and will write about it more. In your role, personalize AI with proprietary datasets: for example, a salesperson trains models on client interactions for predictive insights. Your context and wisdom are priceless, and this is the moment they will become highly valuable. This reskilling in data literacy births future jobs like personal AI trainers, where your domain knowledge is commoditized. Nuances: Privacy tools like differential privacy mitigate risks; implications involve economic models shifting to data ownership. Philosophically, it affirms Lockean self-ownership extended to digital extensions. Psychologically, it reduces anxiety via the illusion of control (Langer), but edge cases include over-customization leading to echo chambers, necessitating diverse inputs. Ultimately, this empowers serial entrepreneurship, magnifying adaptability across the 5000 days.

Finally, lifelong learning loops with AI close the amplification circuit, ensuring perpetual growth. Currently, use AI tutors like Khanmigo to upskill, magnifying curiosity into expertise. This prepares for serial jobs: From analyst to inventor, each iteration builds on prior magnifications. Psychologically, it aligns with growth mindset (Dweck), turning challenges into opportunities. Philosophically, it embodies Nietzsche’s eternal recurrence, willingly embracing change. Implications: Accelerated obsolescence cycles demand agility; edge cases like information overload require mindfulness integration.

In the Hero’s Journey of the 5000 days, marshaling AI thus transforms you from participant to architect, magnifying abilities to forge not just jobs, but legacies of abundance. This is the Interregnum, but it is not purgatory. It is your time to move ahead, moment to moment, with grace.

And STOP HIDING. Proudly claim your use of AI as a tool and thrive. You have this many days…

We are on this journey together. Some of us stand on the shoulders of giants and have thought about this for decades. We will not go it alone, and I hope to build many parts to this series and share the mastermind insight from the powerful Read Multiplex member Forum: https://readmultiplex.com/forums/topic/you-have-5000-days-navigating-the-end-of-work-as-we-know-it/. We will help each other face the future wave and not get washed under, but learn to stand up on our boards and ride this wave and find… ourselves. Join us.

To continue this vital work documenting, analyzing, and sharing these hard-won lessons before we launch humanity’s greatest leap, I need your support. Independent research like this relies entirely on readers who believe in preparing wisely for our multi-planetary future. If this has ignited your imagination about what is possible, please consider donating at Buy Me a Coffee or becoming a member. Value for the value you received here.

Every contribution helps sustain deeper fieldwork, upcoming articles, and the broader mission of translating my work to practical applications. Ain’t no large AI company supporting me, but you are, even if you just read this far. For this, I thank you.

Stay aware and stay curious,

