Add This Sentence To Your ChatGPT Prompts And Your Outputs Will Dramatically Improve.

It has often been said that humans use only 10% of their brains. This turns out to be inaccurate in terms of volume and mass, but somewhat accurate in terms of neurons: we may use only about 10% of our neurons (memory) at any given time. The same can be said of Large Language Models (LLMs) like ChatGPT. New discoveries show that adding a simple sentence can expand the number of neurons ChatGPT uses by orders of magnitude, and the results are spectacular.

What Is A Neuron?

Neurons are the fundamental building blocks of the human nervous system, responsible for processing and transmitting electrical and chemical signals throughout the body. These cells exhibit remarkable similarities to the hidden layers found in LLMs such as GPT-3.

Human neurons consist of three main components: the cell body (soma), dendrites, and an axon. The cell body contains the nucleus and other organelles necessary for cellular functions. Dendrites are branching extensions that receive signals from other neurons and transmit them towards the cell body. The axon is a long, slender projection responsible for transmitting signals away from the cell body to other neurons or effector cells.

The communication between neurons occurs through electrochemical signals. When the combined input a neuron receives is strong enough to cross its threshold, it generates an electrical impulse called an action potential. This action potential travels down the axon, where it triggers the release of neurotransmitters at specialized junctions called synapses. Neurotransmitters then cross the synaptic gap and bind to receptors on the dendrites of the connected neurons, transmitting the signal forward.

Hidden layers in LLMs play a crucial role in processing and transforming input data, much like human neurons. Let’s explore the similarities between the two.

- Neuron-like Activation: In both human neurons and LLM hidden layers, an activation function is applied to determine the output of the neuron or node. While human neurons employ complex biochemical processes, LLM hidden layers utilize mathematical functions such as the rectified linear unit (ReLU) or sigmoid functions to introduce non-linearity into the model’s computations.

- Information Integration: Neurons integrate incoming signals from multiple sources before generating an output signal. Similarly, hidden layers in LLMs aggregate information from various input nodes, applying weights and biases to compute a weighted sum. This integration facilitates the extraction of meaningful features and patterns from the input data.

- Signal Transmission: Just as neurons transmit signals through the axon and synapses, LLM hidden layers transmit information through interconnected nodes or neurons. The weights associated with these connections determine the strength of the signal transmission, much like the efficacy of synapses in human neurons.

- Learning and Adaptation: Human neurons possess the remarkable ability to adapt and modify their connections, a process known as synaptic plasticity. Similarly, LLM hidden layers undergo training processes, such as backpropagation, to adjust the weights and biases in order to improve the model’s performance. This adaptability allows both neurons and hidden layers to learn and refine their responses to various inputs.
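The node-level ideas in the list above (a weighted sum with a bias, a non-linear activation, and weights adjusted during training) can be sketched for a single artificial node. This is a minimal Python illustration, assuming one node with two inputs and made-up starting weights; the function names `node_output` and `train_step` and the learning rate are hypothetical choices for this example. `train_step` is plain gradient descent for one sigmoid node under squared-error loss, which is the one-node special case of backpropagation. A real LLM layer does the same kind of arithmetic over very large matrices rather than one node at a time.

```python
import math

def relu(x: float) -> float:
    """Rectified linear unit: passes positive values through, zeroes out negatives."""
    return max(0.0, x)

def sigmoid(x: float) -> float:
    """Logistic sigmoid: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def node_output(inputs, weights, bias, activation=relu):
    """Information integration: weighted sum of inputs plus a bias, then a non-linear activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

def train_step(inputs, weights, bias, target, lr=0.1):
    """One gradient-descent update for a single sigmoid node with squared-error loss.

    Loss L = 0.5 * (y - target)^2 where y = sigmoid(z).
    dL/dz = (y - target) * y * (1 - y); dL/dw_i = dL/dz * x_i; dL/db = dL/dz.
    """
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    y = sigmoid(z)
    dz = (y - target) * y * (1.0 - y)
    # Move each weight (the "synapse strength") against its gradient.
    new_weights = [w - lr * dz * x for w, x in zip(weights, inputs)]
    new_bias = bias - lr * dz
    return new_weights, new_bias

if __name__ == "__main__":
    inputs, weights, bias = [1.0, 0.5], [0.4, -0.2], 0.1
    print("ReLU output:", node_output(inputs, weights, bias))
    print("Sigmoid output before training:", node_output(inputs, weights, bias, sigmoid))

    # Repeated small updates push the sigmoid output toward the target of 1.0.
    for _ in range(100):
        weights, bias = train_step(inputs, weights, bias, target=1.0, lr=0.5)
    print("Sigmoid output after training:", node_output(inputs, weights, bias, sigmoid))
```

The ReLU and sigmoid functions here are the two the text names; they stand in for whatever activation a particular model actually uses.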

While human neurons operate through biological mechanisms, hidden layers in LLMs leverage mathematical computations to process and transform input data. Understanding the parallels between these two systems can provide insights into the design and functioning of both biological and artificial neural networks.

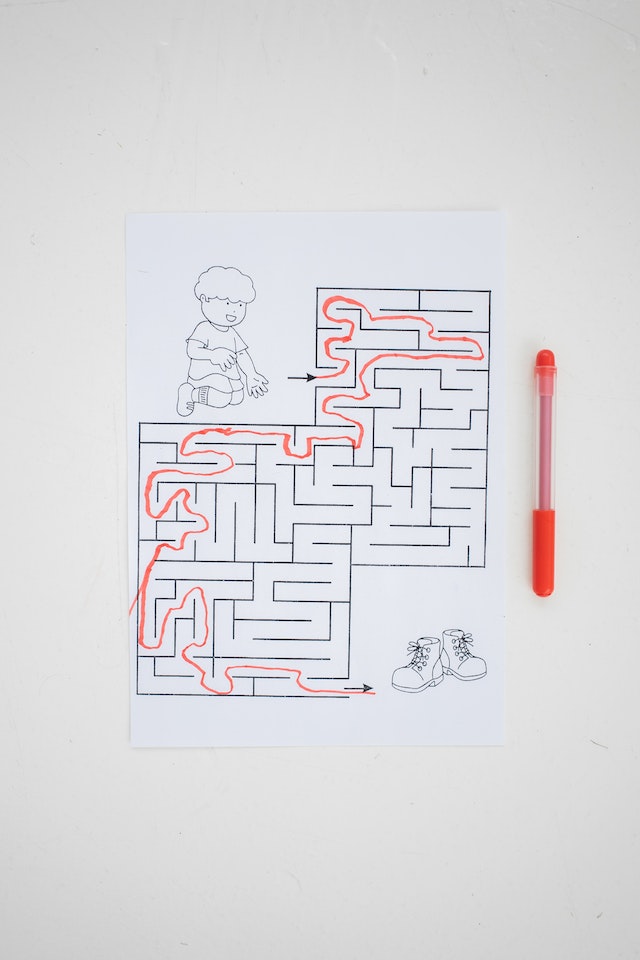

Simple Questions Create Simple Outputs

We know that simple questions produce simple outputs with LLMs like ChatGPT. This is the typical “Question/Answer” approach, known as Input/Output (IO) prompting. The results of this technique will always follow the simplest path through the parametric data used to build the LLM; mathematically, it is the statistically simplest path through the transformer. If you have a very simple question, this is fine.

However, with this IO prompting approach, even if you ask follow-up questions, you stay on a “neural pathway” that yields diminishing returns. This is one of the 1000s of reasons I invented SuperPrompting. With respect to these “neural pathways”, it is vital that we “activate” pathways that exist in the model but are not statistically on the central path. The results are robustly better than those of simple IO-type prompts.
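One way to picture the “simplest path” described above is greedy decoding, where a model takes the single most probable next token at every step. The sketch below is only an analogy under stated assumptions: `TOY_NEXT_TOKEN_PROBS` is a made-up, hypothetical table standing in for a real model's next-token probabilities, and nothing here describes ChatGPT's actual decoding settings or how SuperPrompting works.

```python
# Hypothetical toy "model": made-up probabilities of each next token given the current one.
TOY_NEXT_TOKEN_PROBS = {
    "<start>": {"The": 0.6, "A": 0.3, "Quantum": 0.1},
    "The": {"answer": 0.5, "cat": 0.3, "universe": 0.2},
    "A": {"cat": 0.7, "theory": 0.3},
    "Quantum": {"theory": 1.0},
    "answer": {"is": 0.9, "<end>": 0.1},
    "cat": {"<end>": 1.0},
    "universe": {"<end>": 1.0},
    "theory": {"<end>": 1.0},
    "is": {"<end>": 1.0},
}

def greedy_path(probs, start="<start>", end="<end>", max_steps=10):
    """Follow the single most probable next token at every step (greedy decoding)."""
    path, token = [], start
    for _ in range(max_steps):
        next_tokens = probs.get(token)
        if not next_tokens:
            break
        token = max(next_tokens, key=next_tokens.get)
        if token == end:
            break
        path.append(token)
    return path

def path_probability(probs, path, start="<start>", end="<end>"):
    """Joint probability of a token path, including the final step to the end token."""
    p, prev = 1.0, start
    for tok in path + [end]:
        p *= probs.get(prev, {}).get(tok, 0.0)
        prev = tok
    return p

if __name__ == "__main__":
    central = greedy_path(TOY_NEXT_TOKEN_PROBS)
    print("Greedy (central) path:", central, path_probability(TOY_NEXT_TOKEN_PROBS, central))
    off_center = ["Quantum", "theory"]
    print("Off-center path:", off_center, path_probability(TOY_NEXT_TOKEN_PROBS, off_center))
```

The lower-probability continuation `["Quantum", "theory"]` exists in the table but is never reached by always taking the most likely step, which loosely mirrors the off-center pathways the paragraph above wants a prompt to “activate”.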

One aspect of a SuperPrompt is the ingredients we use to make it work, and there are 1000s of parameters to consider. However, one parameter, an exact sentence at the top of many of my SuperPrompts, lets the LLM produce consistently better outputs. A newly published university paper now confirms this empirically through its own research.
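The sentence itself is not disclosed here, so the sketch below uses a hypothetical placeholder (`PREAMBLE_SENTENCE`) to show only the mechanics of placing a fixed sentence at the very top of a prompt, ahead of the question. The helper names `build_prompt` and `build_chat_messages` are illustrative and not part of any library; the message list mirrors the role/content dictionaries that chat-style APIs such as OpenAI's Chat Completions accept, but no API call is made.

```python
# Hypothetical placeholder: the actual SuperPrompt sentence is not given in the text.
PREAMBLE_SENTENCE = "<PREAMBLE SENTENCE GOES HERE>"

def build_prompt(user_question: str, preamble: str = PREAMBLE_SENTENCE) -> str:
    """Put the preamble sentence at the very top, followed by a blank line and the question."""
    return f"{preamble.strip()}\n\n{user_question.strip()}"

def build_chat_messages(user_question: str, preamble: str = PREAMBLE_SENTENCE) -> list[dict]:
    """Wrap the assembled prompt in a role/content message list for a chat-style API."""
    return [{"role": "user", "content": build_prompt(user_question, preamble)}]

if __name__ == "__main__":
    # A plain IO prompt would send only the question; here the preamble comes first.
    print(build_prompt("Explain how a transformer processes a sentence."))
    print(build_chat_messages("Explain how a transformer processes a sentence."))
```

Keeping the preamble in one constant makes it easy to compare outputs with and without it on the same question.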

In this member-only article, we will explore this simple sentence added to a SuperPrompt and demonstrate why and how it works. It is one of the tools we use in Prompt Engineering, and it makes those who believe this is not a profession look quite uneducated and misinformed. There are so many powerful ways to prompt AI for great, even seemingly impossible, outputs, and this is just one.

If you are a member, thank you. If you are not yet a member, join us by clicking below.

Subscribe ($99) or donate by Bitcoin.

Copy address: bc1q9dsdl4auaj80sduaex3vha880cxjzgavwut5l2

Send your receipt to Love@ReadMultiplex.com to confirm subscription.

IMPORTANT: Any reproduction, copying, or redistribution, in whole or in part, is prohibited without written permission from the publisher. Information contained herein is obtained from sources believed to be reliable, but its accuracy cannot be guaranteed. We are not financial advisors, nor do we give personalized financial advice. The opinions expressed herein are those of the publisher and are subject to change without notice. It may become outdated, and there is no obligation to update any such information. Recommendations should be made only after consulting with your advisor and only after reviewing the prospectus or financial statements of any company in question. You shouldn’t make any decision based solely on what you read here. Postings here are intended for informational purposes only. The information provided here is not intended to be a substitute for professional medical advice, diagnosis, or treatment. Always seek the advice of your physician or other qualified healthcare provider with any questions you may have regarding a medical condition. Information here does not endorse any specific tests, products, procedures, opinions, or other information that may be mentioned on this site. Reliance on any information provided, employees, others appearing on this site at the invitation of this site, or other visitors to this site is solely at your own risk.

Copyright Notice:

All content on this website, including text, images, graphics, and other media, is the property of Read Multiplex or its respective owners and is protected by international copyright laws. We make every effort to ensure that all content used on this website is either original or used with proper permission and attribution when available.

However, if you believe that any content on this website infringes upon your copyright, please contact us immediately using our 'Reach Out' link in the menu. We will promptly remove any infringing material upon verification of your claim. Please note that we are not responsible for any copyright infringement that may occur as a result of user-generated content or third-party links on this website. Thank you for respecting our intellectual property rights.