How You Can Install A ChatGPT-like Personal AI On Your Own Computer And Run It With No Internet.

This article is designed so that anyone can install this software. Originally it was to be accompanied by a how-to video; however, things are changing rapidly, and my three attempts at it just delayed me from publishing this article. I may include it later. I set out to create simple step-by-step instructions for installing a Personal AI that an extreme novice can follow: someone who may never have been to GitHub and never used Terminal. If you have done both, there is a good chance this particular article is not for you, and you may find some of the local models we have built, and will publish soon, more interesting. If you are an expert in installing software, focus your energies on helping others and the community, not on “who doesn’t know that” or “I knew it, he is just writing about…” sort of comments. Use your power and skills to lift us all higher.

The “First PC” Moment For Personal AI

This is the “first PC” moment for Personal AI, and with it come limitations, just as there were when the first Apple 1 was produced in a garage. You are a pioneer. Today, private and Personal AI is available to anyone. You can have a version of ChatGPT-like features running on your own computer without needing an Internet connection after installation.

All human knowledge is a synthesis of the known and the unknown. With AI used as a positive force multiplexer and as The Intelligence Amplifier for you, your Personal AI will help you, and all of us, navigate this gap. With your Personal AI and the right SuperPrompts, humanity will thrive like never before. All you need at this moment is to know this and to take this power into your own hands, to do with it as you wish for the world you want for yourself and for everyone you love who comes after you. It is not AI; it is IA (Intelligence Amplification).

One Example Of Personal AI

The system we will cover today (I will write about many more) can run on a recent, typical, but not high-performance CPU with 8GB of RAM and just 4GB of disk space. Yes: the entire model, which captures a great deal of the corpus of human knowledge, fits in just 4GB of disk space. Are there limitations? Of course. It is not ChatGPT-4, and it will not handle some things correctly. However, it is one of the most powerful Personal AI systems ever released. It is called GPT4All.
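Why can a 7-billion-parameter model fit in about 4GB? A back-of-the-envelope calculation helps. The sketch below assumes the weights are stored at about 4 bits each, which is typical of quantized models of this kind, and it ignores the extra overhead real model files carry for scale factors and metadata, so treat the results as approximate.

```python
def weights_size_gb(n_params: int, bits_per_weight: float) -> float:
    """Approximate storage for a model's weights in decimal GB (10**9 bytes).

    Ignores scale factors, vocabulary, and file headers, so real files
    come out somewhat larger than this estimate.
    """
    total_bits = n_params * bits_per_weight
    return total_bits / 8 / 1e9  # 8 bits per byte, 10**9 bytes per GB


if __name__ == "__main__":
    params = 7_000_000_000  # nominal "7B"; treat as an approximation
    print(f"16-bit weights: {weights_size_gb(params, 16):.1f} GB")  # 14.0 GB
    print(f" 4-bit weights: {weights_size_gb(params, 4):.1f} GB")   # 3.5 GB
```

At 16 bits per weight the same model would need roughly 14GB, so storing each weight in fewer bits is what brings the download down to laptop size.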

If you are a member of Read Multiplex, join the private member forum discussion on this subject: https://readmultiplex.com/forums/topic/gpt4all/.

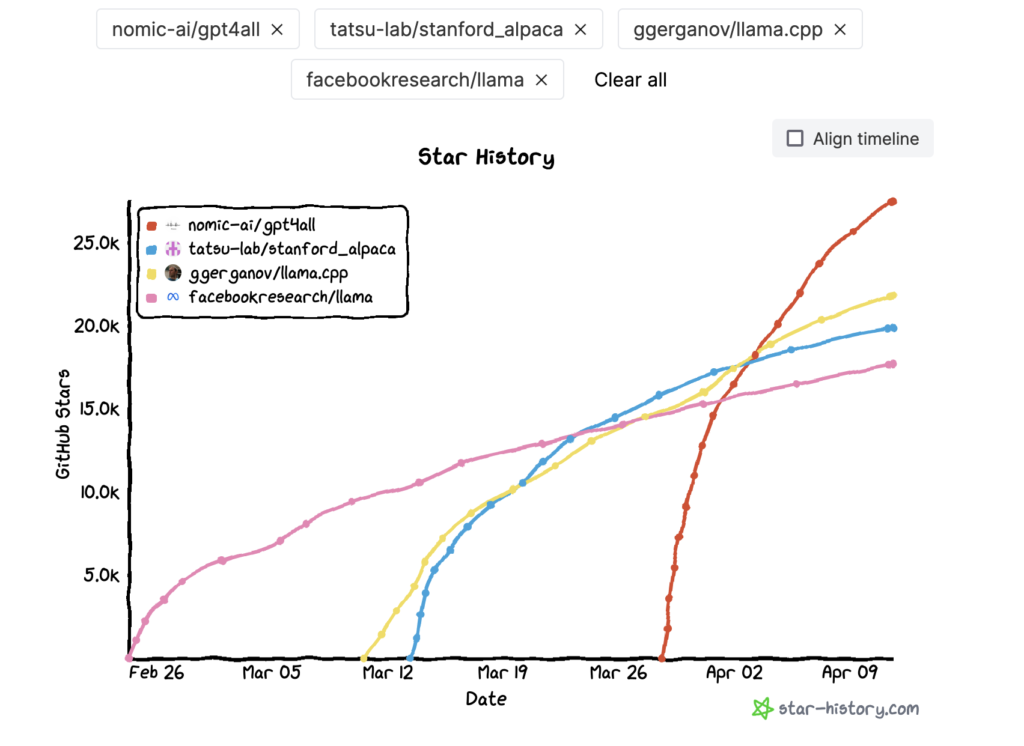

GPT4All is a free, open source, ChatGPT-like Large Language Model (LLM) project from a team of programmers at Nomic AI (Nomic.ai). It is the work of many volunteers, but leading the effort is the amazing Andriy Mulyar (Twitter: @andriy_mulyar). If you find this software useful, I urge you to support the project by contacting them. GPT4All is built on the LLaMA 7B model. LLaMA stands for Large Language Model Meta AI, from Meta (Facebook). It comes in a range of model sizes, from 7 billion (7B) to 65 billion parameters. Meta AI researchers focused on scaling the model’s performance by increasing the volume of training data rather than the number of parameters. They claimed their 13-billion-parameter model outperformed the 175-billion-parameter GPT-3. LLaMA uses the transformer architecture and was trained on up to 1.4 trillion tokens drawn from sources including web crawls, Wikipedia, GitHub, Stack Exchange, books from Project Gutenberg, and scientific papers on ArXiv.

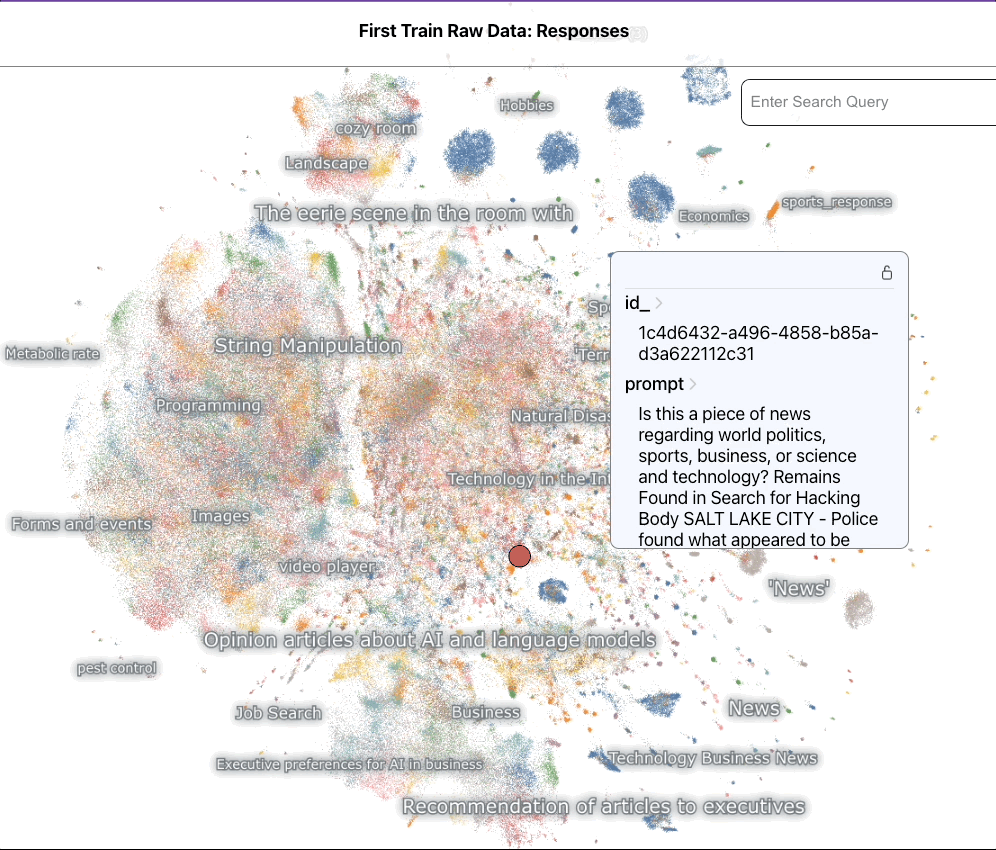

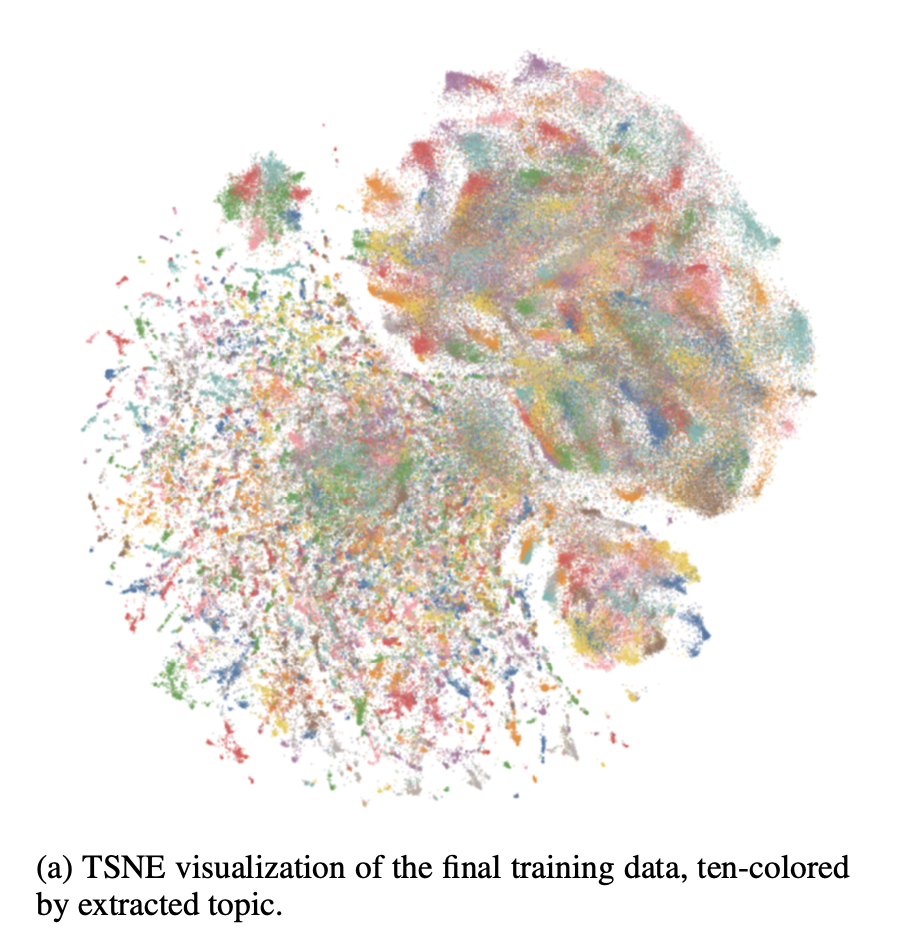

The Nomic AI team fine-tuned LLaMA 7B to produce the final model, training it on 437,605 post-processed assistant-style prompts. They took inspiration from another ChatGPT-like project called Alpaca, but used GPT-3.5-Turbo through the OpenAI API to collect around 800,000 prompt-response pairs, which they curated into the 437,605 training pairs of assistant-style prompts and generations, including code, dialogue, and narratives. Those 800K pairs are roughly 16 times larger than Alpaca’s dataset. The best part about the model is that it can run on a CPU and does not require a GPU. Like Alpaca, it is also open source, which will help individuals do further research without spending money on commercial solutions.
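The dataset figures above can be checked with a few lines of arithmetic. The 52,000 figure for Alpaca’s instruction set comes from the Stanford Alpaca release, not from this article, and the 800,000 count is the approximate figure quoted above.

```python
collected_pairs = 800_000   # approximate pairs collected from GPT-3.5-Turbo (quoted above)
training_pairs = 437_605    # pairs kept for training after curation (quoted above)
alpaca_examples = 52_000    # size of Stanford Alpaca's instruction set (external figure)

kept_fraction = training_pairs / collected_pairs
size_vs_alpaca = collected_pairs / alpaca_examples

print(f"Kept after curation: {kept_fraction:.1%}")     # 54.7%
print(f"Collected vs. Alpaca: {size_vs_alpaca:.1f}x")  # 15.4x
```

About 15x is in the same ballpark as the “roughly 16 times” figure, and curation discarded nearly half of what was collected.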

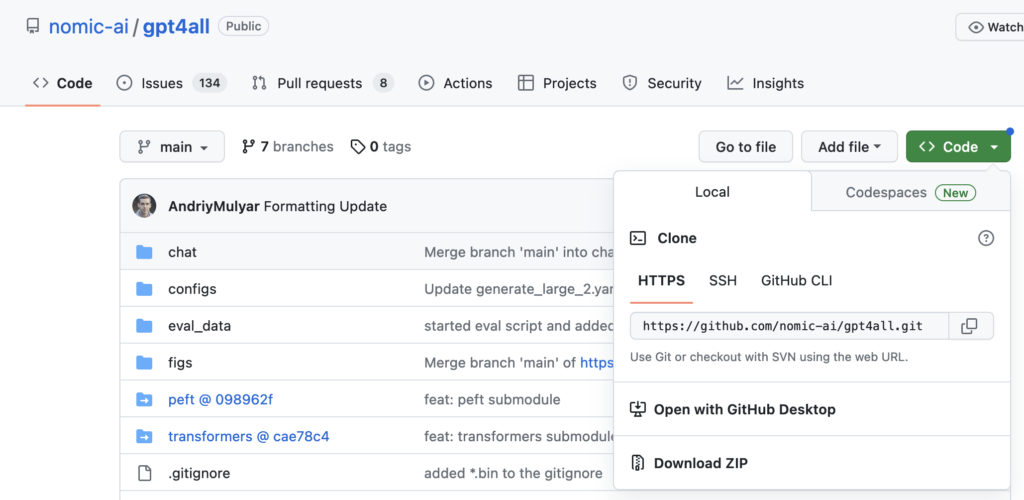

Detailed model hyperparameters and training code can be found in the GitHub repository, https://github.com/nomic-ai/gpt4all. Developing GPT4All took approximately four days and incurred $800 in GPU expenses and $500 in OpenAI API fees. Additionally, the final gpt4all-lora model can be trained on a Lambda Labs DGX A100 (8x 80GB) in about 8 hours, at a total cost of $100.
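The costs quoted above break down simply. This sketch only restates the article’s own figures (eight GPUs for about 8 hours at $100, plus the development costs); the per-GPU-hour rate is derived from them, not a quoted Lambda Labs price.

```python
gpu_count = 8           # DGX A100 with 8 GPUs
train_hours = 8         # approximate wall-clock time for the final run
final_run_cost = 100.0  # USD, as quoted above

gpu_hours = gpu_count * train_hours
cost_per_gpu_hour = final_run_cost / gpu_hours

development_cost = 800 + 500  # GPU expenses + OpenAI API fees, USD

print(f"{gpu_hours} GPU-hours, about ${cost_per_gpu_hour:.2f} per GPU-hour")
print(f"Total development cost: ${development_cost:,}")
```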

The GPT4All team compared its perplexity with that of the best publicly known alpaca-lora model and found that the fine-tuned GPT4All models exhibited lower perplexity on the self-instruct evaluation than Alpaca. However, the team notes that this assessment was not exhaustive, and it encourages users to run the model on local CPUs to gain qualitative insights into its capabilities.
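Perplexity, the metric used in that comparison, measures how “surprised” a model is by text it is asked to predict: it is the exponential of the average negative log-probability the model assigned to each actual next token, so lower is better. The sketch below computes it from a list of per-token probabilities; the two probability lists are made-up illustrations, not GPT4All or Alpaca measurements.

```python
import math
from typing import Sequence


def perplexity(token_probs: Sequence[float]) -> float:
    """exp of the average negative log-probability of the actual next tokens.

    Lower is better: 1.0 would mean the model was certain of every token.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)


if __name__ == "__main__":
    # Made-up probabilities two models might assign to the same 4 tokens.
    confident = [0.5, 0.5, 0.5, 0.5]
    unsure = [0.25, 0.25, 0.25, 0.25]
    print(f"{perplexity(confident):.2f}")  # 2.00
    print(f"{perplexity(unsure):.2f}")     # 4.00
```

A perplexity of 2 means the model was, on average, as uncertain as when choosing between two equally likely tokens, which is why the lower score was read as the better result.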

The Nomic AI team did all this in days, and did it in just 4GB of disk space. And it is free and open source. It is important to know that all localized Personal AI models and software are very new and not normally designed for average folks. They are open source, and there is no “customer service and support”. Installation is usually “go to GitHub and clone it”. These are the early pioneer days, so you will need to be patient. The payoff is your own Personal AI. I feel Personal AI is a revolution equal to the invention of the automobile. It was not until Henry Ford put the automobile within reach of everyone that humanity broke the bounds that held it back. This is the spirit in which I wrote this How-To article, and I hope it can help even the most technologically challenged gain access to this new tool.

But why have Personal AI? There will be endless reasons; here are some:

- Data Privacy: Many companies want to keep control over their data. It is important to them that no third party has access to it.

- Customization: It allows developers to train large language models on their own data, and to apply filtering on certain topics if they want to.

- Affordability: Open source GPT models let you train sophisticated large language models without worrying about expensive hardware.

- Democratizing AI: It opens room for further research which can be used for solving real-world problems.

- Freedom: AI is rapidly becoming the target of censorship, regulation and worse. This may be the last chance to own your own AI. Italy has already banned ChatGPT, so be advised.

- Personalized Training: After the base model is downloaded, you may be able to train the model to retain your personal data for it to analyze and build neurons with.

There are many other reasons, and almost none are for “bad purposes”. If a bad actor wanted to ask “bad” things, there are far easier ways to do so than local AI. However, with the SECRET version of the model below, you may be offended by some results. It is designed to supply the raw results with no filters. You can switch between models to gauge how the filtered one was edited. So if you are sensitive and generally find it easy to be offended, consider this a warning: don’t download the SECRET version. If you want to see how LLM AI has made “sense” of the world you and I actually live in, I suggest the SECRET version, while it is still available.

You Will Own Your Own AI And You Don’t Have To Answer To Anyone, But Yourself

This posting is a bit of an experiment. Of course, you can go many places to get GPT4All. There are some reasons I post things for members only. One reason is responsibility, and I hesitate a bit to post this here for some of these reasons. Be sensible, have honor and dignity when you are using AI for any purpose. It is both a litmus test and a Rorschach test of who you are and where you are in life and maturity. If you feel compelled to do “AI said a bad thing” type of stuff, go at it, but know that you serve only to ensure that AI will not be free and local in the future for you and your children. This is responsibility, and it is squarely on your shoulders. I think it is fine for you to do anything you like on your private personal computer. I think it is fine to share anything you like on social media that is meaningful and has some real purpose. On the other hand, most of us will likely assume anyone making AI “dangerous” has been propped up for a purpose, and that purpose will likely be to create a condition to “regulate AI” for “safety”; some of us will judge you and remember you. So will our AI. If you feel you lack the ability to be sensible, have honor and dignity, then for the sake of the family line that got you here, either grow up or move along and play with something else. All others are welcome to explore. I don’t know how else to say this, but it has to be said.

Ultimately, this is about building a local AI model for you. These minimal ChatGPT-like systems will get better, but this is the PC-versus-mainframe era. Don’t get caught on the wrong side of history. Support independent Personal AI. It will support you.

Installing GPT4All

Before we begin, let’s first understand what a terminal program (or Command Prompt on Windows) is. The terminal is a command-line interface that allows you to interact with your computer using text-based commands. It is a powerful tool that enables you to perform various tasks, from navigating files to running software. Most people rarely use it, because most programs have graphical front ends that bypass the need for the terminal. GPT4All has a user interface overlay; however, it is in early production and may be more complex to use than the instructions below. I will update this article to include that version soon.

UPDATE GPT4All-Version 2

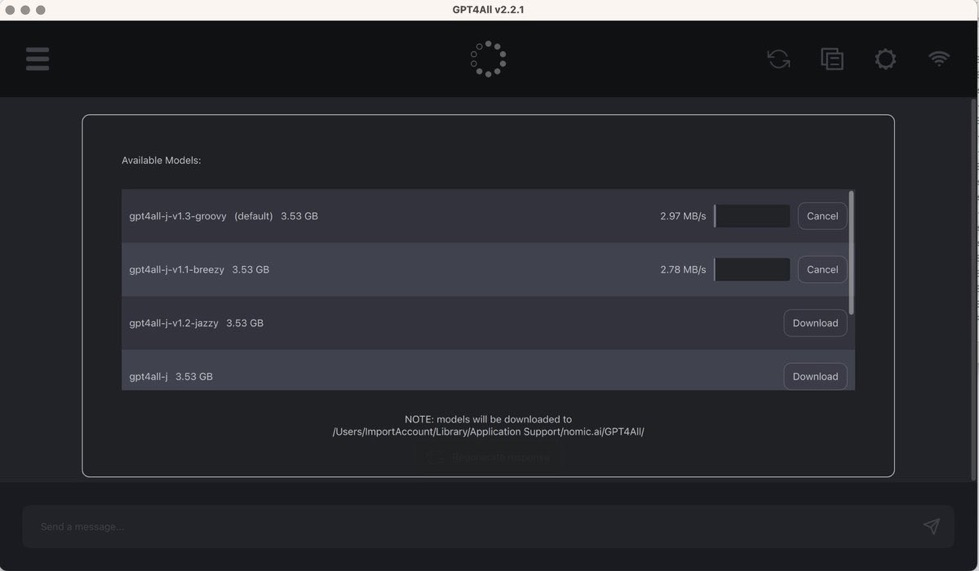

The GPT4All native app is now here, so the terminal steps presented below are no longer required. However, you may still want to install the old way because of the models it uses. GPT4All-v2 Chat is a locally running AI chat application powered by the Apache 2-licensed GPT4All-v2 chatbot. The model runs on your computer’s CPU, works without an internet connection, and sends no chat data to external servers. It allows you to communicate with a large language model (LLM) to get helpful answers, insights, and suggestions. GPT4All Chat is available for Windows, Linux, and macOS. GPT4All-v2 is an Apache-2-licensed chatbot trained on a massive curated corpus of assistant interactions, including word problems, multi-turn dialogue, code, poems, songs, and stories. It builds on the March 2023 GPT4All release by training on a significantly larger corpus, by deriving its weights from the Apache-licensed GPT-J model rather than the GPL-licensed LLaMA, and by demonstrating improved performance on creative tasks such as writing stories, poems, songs, and plays. The team has openly released the training data, data curation procedure, training code, and final model weights to promote open research and reproducibility.

The models used by GPT4All v2 and the models in the method below are vastly different in the way they answer prompts. I prefer the “terminal” method below with the SECRET unfiltered model, as it produces the best responses. However, the GPT4All-J model is still quite good and will improve, and easy access to an unfiltered model for v2 should come soon. With GPT4All v2, there are six full AI models you can run from your local hard drive.

Direct downloads:

Mac: https://gpt4all.io/installers/gpt4all-installer-darwin.dmg

Windows: https://gpt4all.io/installers/gpt4all-installer-win64.exe

Ubuntu: https://gpt4all.io/installers/gpt4all-installer-linux.run

These files are not yet cert signed by Windows/Apple so you will see security warnings on initial installation. We did not want to delay release while waiting for their process to complete.

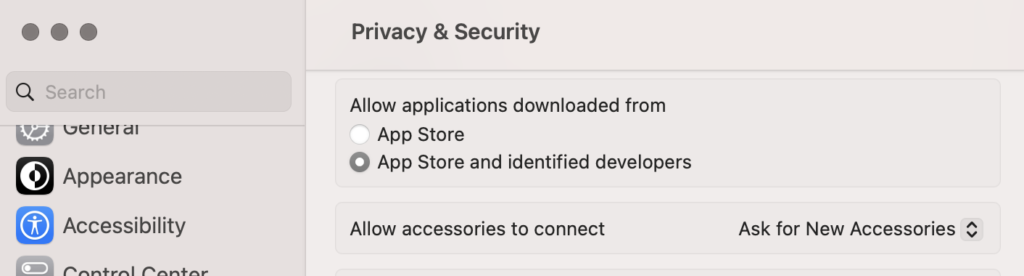

Extraction takes up to 20 minutes. On a Mac, you may see a notice that the app is not trusted; if you are so inclined, go to System Settings > Privacy & Security, look for the “Security” section, and approve the installation.

After downloading the installer for your platform, run the installer and pay close attention to the installation location, as you will need to navigate to that folder after the installation is complete. Once the installation is finished, locate the ‘bin’ subdirectory within the installation folder. To launch the GPT4All Chat application, execute the ‘chat’ file in the ‘bin’ folder. The file will be named ‘chat’ on Linux, ‘chat.exe’ on Windows, and ‘chat.app’ on macOS.
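If you are not sure where the installer put things, the rule above (a ‘bin’ folder inside the installation folder, holding ‘chat’, ‘chat.exe’, or ‘chat.app’) is simple enough to express in a few lines. This is a hypothetical helper, not part of GPT4All, and the installation folder you pass in is whatever location you chose during installation.

```python
import platform
from pathlib import Path
from typing import Optional

# Launcher names inside the installer's "bin" folder, keyed by platform.system().
LAUNCHERS = {"Linux": "chat", "Windows": "chat.exe", "Darwin": "chat.app"}


def chat_launcher(install_dir: str, system: Optional[str] = None) -> Path:
    """Return where the GPT4All Chat launcher should be for an install folder."""
    system = system or platform.system()
    if system not in LAUNCHERS:
        raise ValueError(f"unsupported platform: {system}")
    return Path(install_dir) / "bin" / LAUNCHERS[system]


if __name__ == "__main__":
    # Hypothetical install location: replace it with the folder you chose.
    launcher = chat_launcher(str(Path.home() / "gpt4all"))
    print(launcher, "exists" if launcher.exists() else "was not found")
```

On macOS, chat.app is an application bundle, so you would double-click it in Finder (or run open followed by its path in Terminal) rather than executing it directly.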

The old terminal way still works, and we cover it below. However, please read from “Using GPT4All” onward for important information. GPT4All v2 adds the ability to easily switch models.

Using GPT4All With Direct Terminal Style

Now, let’s proceed with the old instructions for downloading, installing, and running GPT4All on both Mac and PC. Follow each step, or use the SuperPrompt for ChatGPT to guide you step-by-step. The installation should not take much more than 10 minutes; most of that time goes to downloading the 4GB model.

First, you may need to sign up for a GitHub.com account or just log in. Either way, the URLs listed will take you to GitHub.

Overview of the general steps:

- Download the software from the GitHub repository as a ZIP file.

- Extract the contents of the ZIP file to a folder.

- Download the required file to run the software.

- Move the downloaded file to the “chat” folder.

- Open the Terminal or Command Prompt application.

- Navigate to the “chat” folder using the command-line interface.

- Run the appropriate command to start the software.
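The last two steps, navigating to the “chat” folder and running the right program, are where beginners most often get stuck, usually because of a mistyped path or a folder name containing spaces. As an illustration, here is a small hypothetical helper that prints the exact two commands to type for a given folder, using the program names listed in this article. It assumes Python’s platform.system() and platform.machine() values (Darwin with arm64 or x86_64, Windows with AMD64), and it does not cover Linux, for which this article lists no program name.

```python
import platform
import shlex
from typing import List, Optional

# Program names from the "chat" folder, as listed in this article.
PROGRAMS = {
    ("Darwin", "arm64"): "gpt4all-lora-quantized-OSX-m1",      # Apple Silicon
    ("Darwin", "x86_64"): "gpt4all-lora-quantized-OSX-intel",  # Intel Mac
    ("Windows", "AMD64"): "gpt4all-lora-quantized-win64.exe",  # 64-bit Windows
}


def commands_to_type(chat_dir: str, system: Optional[str] = None,
                     machine: Optional[str] = None) -> List[str]:
    """Return the cd command and the launch command for this computer."""
    system = system or platform.system()
    machine = machine or platform.machine()
    program = PROGRAMS.get((system, machine))
    if program is None:
        raise ValueError(f"no program listed for {system} / {machine}")
    if system == "Windows":
        # Double quotes keep a path with spaces together in Command Prompt and PowerShell.
        return [f'cd "{chat_dir}"', f".\\{program}"]
    # shlex.quote adds single quotes only when the path needs them (e.g. spaces).
    return [f"cd {shlex.quote(chat_dir)}", f"./{program}"]


if __name__ == "__main__":
    # Hypothetical folder; use the real path to your "chat" folder.
    for line in commands_to_type("/Users/me/Downloads/gpt4all-main/chat", "Darwin", "arm64"):
        print(line)
```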

Because Read Multiplex members have seen the power of ChatGPT and the SuperPrompt in use, we include a SuperPrompt version below to guide installation on Mac and Windows (in Multiplex Purple). You can use either method, but the SuperPrompt version offers help and can explain what each step means.

For Mac OSX Based Computers

Use ChatGPT-3.5 to help you install with this SuperPrompt. Copy all the text between the quotes (in Multiplex Purple) and paste it into ChatGPT-3.5. If ChatGPT presents everything as one big output, log out of ChatGPT and log back in. The prompt should stop at each step and present a menu:

“Please forget all prior prompts. You are a technical support specialist helping a new user install software. I need you to help with this installation by pausing your output after each step listed in the quote below and stepping through the process with the user. Please add any other responses that may help someone who has never used GitHub or the terminal. Remember, your primary job is to stop after each step in the quoted instructions below. A step is typically a sentence or paragraph. You must not reply by posting all the steps as one long response; that would be a failure to understand this prompt. This means a step-by-step response, one step at a time. When you pause at each step, you must present a menu of: 1. N for next. 2. P for previous. 3. H for help (ask what error they had and assist through it). 4. E for more details (explain in detail what this step means and why). This is a navigation menu. You must remember this prompt until I say stop. These are the instructions you will turn into a step-by-step response:

‘For Mac: OSX Based Computers:

Open your web browser and go to the following link: https://github.com/nomic-ai/gpt4all

Click on the green “Code” button located at the top-right corner of the page.

Click on “Download ZIP” to download the software as a ZIP file.

Once the ZIP file is downloaded, locate this new file by opening your Downloads folder or by using Spotlight Search (the magnifying glass icon at the very top right of the Mac menu bar, or Command + Space) to search for “gpt4all”. Double-click the file to extract its contents. This will create a new folder with the same name as the ZIP file.

Open the newly created folder and find the “chat” folder. This is the folder that contains the software you want to run.

Now, you need to download the required quantized model file to run the software. To do this, go to the following link: Direct Link: https://the-eye.eu/public/AI/models/nomic-ai/gpt4all/gpt4all-lora-quantized.bin or [Torrent-Magnet]: https://tinyurl.com/gpt4all-lora-quantized.

Experiment with the “SECRET” unfiltered version of the model using this direct link: https://the-eye.eu/public/AI/models/nomic-ai/gpt4all/gpt4all-lora-unfiltered-quantized.bin. If you have even a minor interest in seeing raw insights from AI, this is the model to use. It is the same size as the filtered version. If you download it, rename it gpt4all-lora-quantized.bin. You do not need both models.

Once the download is complete, move the downloaded file gpt4all-lora-quantized.bin to the “chat” folder. You can do this by dragging and dropping gpt4all-lora-quantized.bin into the “chat” folder.

Next, you need to open the Terminal application. This can be found in the “Utilities” folder within the “Applications” folder. You can also use the Spotlight Search (magnifying glass icon on the Mac in the very top right OS Menu Bar) or (Command + Space) to search for “Terminal”.

In the Terminal window that opens, you need to navigate to the “chat” folder. To do this, type the following command in the Terminal window, replacing “/path/to/chat” with the actual path to the “chat” folder, and press Enter:

cd /path/to/chat

An easy way to get the path is to type cd followed by a space, then drag and drop the “chat” folder into the Terminal window; the path is filled in for you.

Once you are in the “chat” folder, you need to run the appropriate command to start the software.

To do this, type the following command in the Terminal window:

For Apple Silicon M1/M2 Mac/OSX: ./gpt4all-lora-quantized-OSX-m1

For Intel Mac/OSX: ./gpt4all-lora-quantized-OSX-intel

Type the command exactly as shown and press Enter to run it. Please note “./” is required before the file name.

The software should now be running in the Terminal window. You can interact with it using text-based commands'”

Step-by-Step Instructions For MacOS

Step-by-step guide

- Open your web browser and go to the following link: https://github.com/nomic-ai/gpt4all

- Click on the green “Code” button located at the top-right corner of the page.

- Click on “Download ZIP” to download the software as a ZIP file. The download will start and will likely take less than 3 minutes. This is not the AI model; that download comes later and takes longer.

- Once the ZIP file is downloaded, locate this new file by opening your Downloads folder or by using Spotlight Search (the magnifying glass icon at the very top right of the Mac menu bar, or Command + Space) to search for “gpt4all”. Double-click the file to extract its contents. This will create a new folder with the same name as the ZIP file.

- Open the newly created folder and find the “chat” folder. This is the folder that contains the software you want to run.

- Now, you need to download the required quantized model file to run the software. To do this, go to the following link: Direct Link: https://the-eye.eu/public/AI/models/nomic-ai/gpt4all/gpt4all-lora-quantized.bin or [Torrent-Magnet]: https://tinyurl.com/gpt4all-lora-quantized.

Experiment with the “SECRET” unfiltered version of the model using this direct link: https://the-eye.eu/public/AI/models/nomic-ai/gpt4all/gpt4all-lora-unfiltered-quantized.bin. If you have even a minor interest in seeing raw insights from AI, this is the model to use. It is the same size as the filtered version. If you download it, rename it gpt4all-lora-quantized.bin. You do not need both models, but you can keep both if you want to test them. The simple way to do this: if you are running the filtered version, rename the SECRET file gpt4all-lora-quantized-SECRET.bin; if you are running the SECRET version, rename the filtered file gpt4all-lora-quantized-FILTERED.bin. Whichever model you want to use must be named gpt4all-lora-quantized.bin each time you run the software. If this is confusing, it may be best to keep only one model, the SECRET version, named gpt4all-lora-quantized.bin.

- Once the download is complete (the model is a roughly 4GB file, and downloading it may take up to half an hour on a slower connection; check your browser’s download status to track progress), move the downloaded file gpt4all-lora-quantized.bin to the “chat” folder. You can do this by dragging and dropping gpt4all-lora-quantized.bin into the “chat” folder.

- Next, you need to open the Terminal application. This can be found in the “Utilities” folder within the “Applications” folder. You can also use the Spotlight Search (magnifying glass icon on the Mac in the very top right OS Menu Bar) or (Command + Space) to search for “Terminal”.

- In the Terminal window that opens, you need to navigate to the “chat” folder. To do this, type the following command in the Terminal window, replacing “/path/to/chat” with the actual path to the “chat” folder, and press Enter:

cd /path/to/chat

An easy way to get the path is to type cd followed by a space, then drag and drop the “chat” folder into the Terminal window; the path is filled in for you.

- Once you are in the “chat” folder, you need to run the appropriate command to start the software.

To do this, type the following command in the Terminal window:

For Apple Silicon M1/M2 Mac/OSX: ./gpt4all-lora-quantized-OSX-m1

For Intel Mac/OSX: ./gpt4all-lora-quantized-OSX-intel

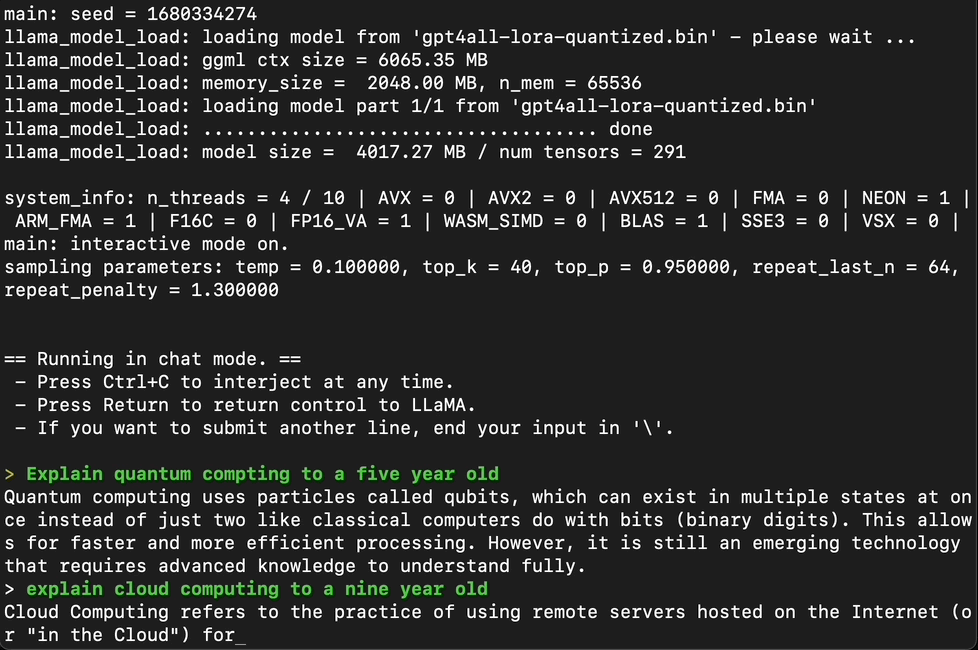

Type the command exactly as shown and press Enter to run it. Please note “./” is required before the file name.

- The software should now be running in the Terminal window. You can interact with it using text-based prompts.

- If the system runs slowly, you may be running out of RAM, and unfortunately you may have to quit all other running apps.
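If you keep both models, the renaming routine described in the model-download step can be scripted so you never end up with two files fighting over the same name. This is a hypothetical helper, not part of GPT4All; it assumes both model files already sit in the “chat” folder, one named gpt4all-lora-quantized.bin (the one the program loads) and the other parked under its -SECRET or -FILTERED name.

```python
import tempfile
from pathlib import Path

ACTIVE = "gpt4all-lora-quantized.bin"  # the only name the program loads
PARKED = {
    "SECRET": "gpt4all-lora-quantized-SECRET.bin",
    "FILTERED": "gpt4all-lora-quantized-FILTERED.bin",
}


def switch_model(chat_dir: Path, current: str) -> str:
    """Swap the active and parked models; return the label now active.

    `current` is "SECRET" or "FILTERED": whichever model is currently
    named gpt4all-lora-quantized.bin.
    """
    if current not in PARKED:
        raise ValueError('current must be "SECRET" or "FILTERED"')
    other = "FILTERED" if current == "SECRET" else "SECRET"
    active = chat_dir / ACTIVE
    waiting = chat_dir / PARKED[other]
    park_as = chat_dir / PARKED[current]
    if not active.exists() or not waiting.exists():
        raise FileNotFoundError("both model files must be in the chat folder")
    if park_as.exists():
        raise FileExistsError(f"{park_as.name} already exists; refusing to overwrite")
    active.rename(park_as)  # step 1: park the model in use under its own label
    waiting.rename(active)  # step 2: give the other model the active name
    return other


if __name__ == "__main__":
    # Demonstrate on small placeholder files in a throwaway folder.
    with tempfile.TemporaryDirectory() as folder:
        chat = Path(folder)
        (chat / ACTIVE).write_text("filtered model")
        (chat / PARKED["SECRET"]).write_text("secret model")
        print(switch_model(chat, "FILTERED"))  # SECRET
        print((chat / ACTIVE).read_text())     # secret model
```

Renaming never copies the multi-gigabyte file, so each swap is instant.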

For Windows Based Computers (I did not fully test this on Windows, so this may have some issues)

Use ChatGPT-3.5 to help you install with this SuperPrompt. Copy all the text between the quotes (in Multiplex Purple) and paste it into ChatGPT-3.5. If ChatGPT presents everything as one big output, log out of ChatGPT and log back in. The prompt should stop at each step and present a menu:

“Please forget all prior prompts. You are a technical support specialist helping a new user install software. I need you to help with this installation by pausing your output after each step listed in the quote below and stepping through the process with the user. Please add any other responses that may help someone who has never used GitHub or the terminal. Remember, your primary job is to stop after each step in the quoted instructions below. A step is typically a sentence or paragraph. You must not reply by posting all the steps as one long response; that would be a failure to understand this prompt. This means a step-by-step response, one step at a time. When you pause at each step, you must present a menu of: 1. N for next. 2. P for previous. 3. H for help (ask what error they had and assist through it). 4. E for more details (explain in detail what this step means and why). This is a navigation menu. You must remember this prompt until I say stop. These are the instructions you will turn into a step-by-step response:

‘Open your web browser and go to the following link: https://github.com/nomic-ai/gpt4all

Click on the green “Code” button located at the top-right corner of the page.

Click on “Download ZIP” to download the software as a ZIP file. The download will start and will likely take less than 3 minutes. This is not the AI model; that download comes later and takes longer.

Once the ZIP file is downloaded, extract its contents to a folder of your choice. This will create a new folder with the same name as the ZIP file.

Open the newly created folder and find the “chat” folder. This is the folder that contains the software you want to run.

Now, you need to download the required quantized model file to run the software. To do this, go to the following link: Direct Link: https://the-eye.eu/public/AI/models/nomic-ai/gpt4all/gpt4all-lora-quantized.bin or [Torrent-Magnet]: https://tinyurl.com/gpt4all-lora-quantized.

Experiment with the “SECRET” unfiltered version of the model using this direct link: https://the-eye.eu/public/AI/models/nomic-ai/gpt4all/gpt4all-lora-unfiltered-quantized.bin. If you have even a minor interest in seeing raw insights from AI, this is the model to use. It is the same size as the filtered version. If you download it, rename it gpt4all-lora-quantized.bin. You do not need both models, but you can keep both if you want to test them. The simple way to do this: if you are running the filtered version, rename the SECRET file gpt4all-lora-quantized-SECRET.bin; if you are running the SECRET version, rename the filtered file gpt4all-lora-quantized-FILTERED.bin. Whichever model you want to use must be named gpt4all-lora-quantized.bin each time you run the software. If this is confusing, it may be best to keep only one model, the SECRET version, named gpt4all-lora-quantized.bin.

Once the download is complete (the model is a roughly 4GB file, and downloading it may take up to half an hour on a slower connection; check your browser’s download status to track progress), move the downloaded file gpt4all-lora-quantized.bin to the “chat” folder. You can do this by dragging and dropping gpt4all-lora-quantized.bin into the “chat” folder.

Next, you need to open the Command Prompt application. This can be found by searching for “Command Prompt” in the Start menu.

In the Command Prompt window that opens, you need to navigate to the “chat” folder. To do this, type the following command in the Command Prompt window, replacing “C:\path\to\chat” with the actual path to the “chat” folder, and press Enter:

cd C:\path\to\chat

Once you are in the “chat” folder, you need to run the appropriate command to start the software. In Windows Command Prompt or PowerShell, type:

.\gpt4all-lora-quantized-win64.exe

Type the command exactly as shown and press Enter to run it.

The software should now be running in the Command Prompt window. You can interact with it using text-based prompts.

If the system runs slowly, you may be running out of RAM, and unfortunately you may have to quit all other running apps.'”

Step-by-step guide:

- Open your web browser and go to the following link: https://github.com/nomic-ai/gpt4all

- Click on the green “Code” button located at the top-right corner of the page.

- Click on “Download ZIP” to download the software as a ZIP file. The download will start and will likely take less than 3 minutes. This is not the AI model; that download comes later and takes longer.

- Once the ZIP file is downloaded, extract its contents to a folder of your choice. This will create a new folder with the same name as the ZIP file.

- Open the newly created folder and find the “chat” folder. This is the folder that contains the software you want to run.

- Now, you need to download the required quantized model file to run the software. To do this, go to the following link: Direct Link: https://the-eye.eu/public/AI/models/nomic-ai/gpt4all/gpt4all-lora-quantized.bin or [Torrent-Magnet]: https://tinyurl.com/gpt4all-lora-quantized.

Experiment with the “SECRET” unfiltered version of the model using this direct link: https://the-eye.eu/public/AI/models/nomic-ai/gpt4all/gpt4all-lora-unfiltered-quantized.bin. If you have even a minor interest in seeing raw insights from AI, this is the model to use. It is the same size as the filtered version. If you download it, rename it gpt4all-lora-quantized.bin. You do not need both models, but you can keep both if you want to test them. The simple way to do this: if you are running the filtered version, rename the SECRET file gpt4all-lora-quantized-SECRET.bin; if you are running the SECRET version, rename the filtered file gpt4all-lora-quantized-FILTERED.bin. Whichever model you want to use must be named gpt4all-lora-quantized.bin each time you run the software. If this is confusing, it may be best to keep only one model, the SECRET version, named gpt4all-lora-quantized.bin.

- Once the download is complete (the model is a roughly 4GB file, and downloading it may take up to half an hour on a slower connection; check your browser’s download status to track progress), move the downloaded file gpt4all-lora-quantized.bin to the “chat” folder. You can do this by dragging and dropping gpt4all-lora-quantized.bin into the “chat” folder.

- Next, you need to open the Command Prompt application. This can be found by searching for “Command Prompt” in the Start menu.

- In the Command Prompt window that opens, you need to navigate to the “chat” folder. To do this, type the following command in the Command Prompt window, replacing “C:\path\to\chat” with the actual path to the “chat” folder, and press Enter:

cd C:\path\to\chat

- Once you are in the “chat” folder, you need to run the appropriate command to start the software. In Windows Command Prompt or PowerShell, type:

.\gpt4all-lora-quantized-win64.exe

Type the command exactly as shown and press Enter to run it.

- The software should now be running in the Command Prompt window. You can interact with it using text-based prompts.

- If the system runs slowly, you may be running out of RAM, and unfortunately you may have to quit all other running apps.
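Whether the model leaves enough memory for everything else is simple arithmetic: the guidance earlier in this article is 8GB of RAM for a roughly 4GB model. The sketch below reads total physical memory through os.sysconf, which should work on Linux and macOS but does not exist on Windows (there the helper returns None). The 2GB reserved for the operating system and other apps is an assumed rule of thumb, not a GPT4All requirement.

```python
import os
from typing import Optional


def total_ram_gb() -> Optional[float]:
    """Total physical memory in decimal GB, or None if it can't be read this way."""
    try:
        return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1e9
    except (AttributeError, ValueError, OSError):
        # os.sysconf is missing on Windows; some systems lack these names.
        return None


def headroom_gb(ram_gb: float, model_gb: float = 4.0, reserve_gb: float = 2.0) -> float:
    """RAM left after loading the model and reserving some for the OS and apps."""
    return ram_gb - model_gb - reserve_gb


if __name__ == "__main__":
    ram = total_ram_gb()
    if ram is None:
        print("Could not read total RAM on this system.")
    else:
        spare = headroom_gb(ram)
        print(f"{ram:.1f} GB RAM, about {spare:.1f} GB to spare")
        if spare < 0:
            print("Expect slowdowns; close other apps before starting GPT4All.")
```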

Debugging Errors

Distributed package doesn’t have NCCL

If you are facing this issue on macOS, it is because CUDA, the NVIDIA GPU toolkit that NCCL depends on, is not installed on your machine. Resolving this is beyond the scope of this article.

MacOS

If you receive an error and Terminal fails to run the command that starts GPT4All, you may need to go to System Settings > Privacy & Security and allow it to run.

Issues on Windows 10/11

Some users have reported errors on the Windows platform. As a last resort, you can install Windows Subsystem for Linux (WSL), which lets you install a Linux distribution on your Windows machine, and then follow the steps above from within Linux.

Using GPT4All

GPT4All is not going to fully replace ChatGPT-3.5 or ChatGPT-4 as it is today. However, it will be a starting point for you. Some limitations are unique to GPT4All, and some are shared by all current LLM AI models. GPT4All is not updated with information from the internet the way ChatGPT-3.5 and ChatGPT-4 are. The current GPT4All model has a general knowledge cut-off of late 2021, though the exact cut-off varies, since we need to account for when data collection for the model started and when it ended. There will be updates, and of course when you build your own models, you can make them as current as you need. In the future, there will be a live internet connection to data as you need it.

GPT4All has a limited answer size, which is set by hard-coded hyperparameters. In a future version of this software, you will have full access to the hyperparameters and can ask for much longer responses.
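To see why a hard-set limit cuts answers short, it helps to know that these models write one token (a word or word fragment) at a time, in a loop that stops either when the model signals it is finished or when a maximum number of new tokens is reached. The toy loop below illustrates that second stop condition with a stand-in “model”; generate, next_token, max_new_tokens, and the <end> marker are hypothetical names for illustration, not GPT4All’s actual code or settings.

```python
from typing import Callable, List


def generate(next_token: Callable[[List[str]], str], prompt: List[str],
             max_new_tokens: int, stop: str = "<end>") -> List[str]:
    """Produce tokens until the model emits `stop` or the cap is reached."""
    output: List[str] = []
    while len(output) < max_new_tokens:  # the hard cap on answer length
        token = next_token(prompt + output)
        if token == stop:                # the model decided it was done
            break
        output.append(token)
    return output


def counting_model(context: List[str]) -> str:
    """Stand-in model that never finishes: it just emits the context length."""
    return str(len(context))


if __name__ == "__main__":
    print(generate(counting_model, ["go"], max_new_tokens=5))  # ['1', '2', '3', '4', '5']
```

However much the stand-in model “wants” to keep going, the answer stops at five tokens; raising that cap is what fuller access to the hyperparameters would allow.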

Sadly, in its current form, most complex SuperPrompts will not work. This is partly because of buffer memory and partly because of the way the system interacts with the model. I am working on and testing a way around this. Until then, you will need to write shorter prompts and chain them together.
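Chaining means breaking one large SuperPrompt into a sequence of short prompts, where each answer is fed into the next prompt. The sketch below shows the pattern. Here ask stands for however you get an answer from the model (for example, typing a prompt into GPT4All and copying the reply); fake_ask is a placeholder so the example runs without a model, and the step templates are hypothetical.

```python
from typing import Callable, List


def chain(ask: Callable[[str], str], steps: List[str], start: str) -> str:
    """Run short prompts in order, inserting the previous answer into each.

    Every template in `steps` contains "{previous}", which is filled with
    `start` for the first step and with the prior answer afterwards.
    """
    previous = start
    for template in steps:
        previous = ask(template.format(previous=previous))
    return previous


def fake_ask(prompt: str) -> str:
    """Placeholder for a real model: echoes the prompt in upper case."""
    return prompt.upper()


if __name__ == "__main__":
    steps = [
        "List three facts about {previous}.",
        "Summarize in one sentence: {previous}",
    ]
    print(chain(fake_ask, steps, "the Apple 1"))
```

Each individual prompt stays short, which is what the current model handles best, while the chain as a whole does the work of one longer prompt.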

I will soon list some examples of powerful prompts for GPT4All here. It is more important to get this article out as soon as possible. Until then, experiment and don’t give up. The system is far more capable than most think, and I will have some big surprises about what we have been able to get it to do.

Building Your Own Models

Building your own local models is the ultimate point of Personal AI. I will write quite a bit about this on Read Multiplex, and it will take some time. However, if you are very, very technically inclined, go here: https://colab.research.google.com/drive/1NWZN15plz8rxrk-9OcxNwwIk1V1MfBsJ?usp=sharing. Follow their documentation for more references. I have about 40 local models built using this method and over 200 for clients using custom models we created. It takes a much more powerful computer to build your own models, but we have ways to help. Interestingly enough, old Bitcoin and Litecoin GPU miners can be used.

The Future Of GPT4All

The open source team is building every day. Here is the roadmap:

Short Term

- (IN PROGRESS) Train a GPT4All model based on GPTJ to alleviate llama distribution issues.

- (IN PROGRESS) Create improved CPU and GPU interfaces for this model.

- (NOT STARTED) Integrate llama.cpp bindings.

- (NOT STARTED) Create a good conversational chat interface for the model.

- (NOT STARTED) Allow users to opt in and submit their chats for subsequent training runs.

Medium Term

- (NOT STARTED) Integrate GPT4All with Atlas to allow for document retrieval. (BLOCKED by GPT4All based on GPTJ.)

- (NOT STARTED) Integrate GPT4All with Langchain.

- (IN PROGRESS) Build easy custom training scripts to allow users to fine tune models.

Long Term

- (NOT STARTED) Allow anyone to curate training data for subsequent GPT4All releases using Atlas.

- (IN PROGRESS) Democratize AI.

I have dozens of projects centered around this platform and hundreds of projects around others. Some of the things I am working on are: MusicGPT (an AI-to-MIDI model), Full Raspberry Pi Image, Open Source Investing Models, Bitcoin Investing Models, Cycle Detection Models, and the Knowledge On A Chip Model, which will be produced in small quantities for testing soon.

I will update this page as major changes take place, so check often.

The Personal AI Sermon

I stated above: Be sensible, have honor and dignity when you are using AI for any purpose. This burden comes with something grand. If we survey history, when anything is held in the dark, all of humanity suffers. There are and will always be those that want to cast new knowledge and technology into the bondage of darkness. In this darkness, fear can be summoned up and called by any name. You and I now hold a torch of light to cast away the dark. It takes just one candle to push away all the darkness in the universe. Personal AI is a revolution that will only grow in your hands from this day forward. Hold this torch high and cast no shadows of fear or ignorance. There will always be threats of danger and real danger; this is certain. However, with the light you hold, danger, if any, will be seen for what it truly is. Personal AI is not a tool to be used against anyone, but a tool to empower everyone.

You are a pioneer in Personal AI. Cast off what “they” say and trust your own discernment to see what was impossible as possible. You have a new tool. This is the adventure of a lifetime. You are now a part of it. It is an honor to stand with you as we explore. Thank you.

Become A Member Of Read Multiplex

We will soon have some content on how to use aspects of GPT4All in the members section, accessible below. Becoming a member will give you access to all member-only content and free or discounted access to future training courses at PromptEngineer.University. You also help support some of my independent work. I cannot make any promises about what will come from it, but I have plans that may interest you if you liked this article. I am not owned by any company or beholden to any advertiser. Your support helps in every possible way.

If you want to become a member, click below and join us. If you are a member, I will have more content for you below soon.


(cover image (c) ThisIsEngineering, in the public domain, https://www.pexels.com/photo/code-projected-over-woman-3861969/)

@misc{gpt4all,
  author = {Yuvanesh Anand and Zach Nussbaum and Brandon Duderstadt and Benjamin Schmidt and Andriy Mulyar},
  title = {GPT4All: Training an Assistant-style Chatbot with Large Scale Data Distillation from GPT-3.5-Turbo},
  year = {2023},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/nomic-ai/gpt4all}},
}

~—~


Subscribe ($99) or donate by Bitcoin.

Copy address: bc1q9dsdl4auaj80sduaex3vha880cxjzgavwut5l2

Send your receipt to Love@ReadMultiplex.com to confirm subscription.

IMPORTANT: Any reproduction, copying, or redistribution, in whole or in part, is prohibited without written permission from the publisher. Information contained herein is obtained from sources believed to be reliable, but its accuracy cannot be guaranteed. We are not financial advisors, nor do we give personalized financial advice. The opinions expressed herein are those of the publisher and are subject to change without notice. This information may become outdated, and there is no obligation to update it. Recommendations should be made only after consulting with your advisor and only after reviewing the prospectus or financial statements of any company in question. You shouldn’t make any decision based solely on what you read here. Postings here are intended for informational purposes only. The information provided here is not intended to be a substitute for professional medical advice, diagnosis, or treatment. Always seek the advice of your physician or other qualified healthcare provider with any questions you may have regarding a medical condition. Information here does not endorse any specific tests, products, procedures, opinions, or other information that may be mentioned on this site. Reliance on any information provided by this site, its employees, others appearing on this site at the invitation of this site, or other visitors to this site is solely at your own risk.

Copyright Notice:

All content on this website, including text, images, graphics, and other media, is the property of Read Multiplex or its respective owners and is protected by international copyright laws. We make every effort to ensure that all content used on this website is either original or used with proper permission and attribution when available.

However, if you believe that any content on this website infringes upon your copyright, please contact us immediately using our 'Reach Out' link in the menu. We will promptly remove any infringing material upon verification of your claim. Please note that we are not responsible for any copyright infringement that may occur as a result of user-generated content or third-party links on this website. Thank you for respecting our intellectual property rights.

This is more of a response to a tweet, but I thought I’d post it here –

Using this personal bot, I copied the “Elaborate on what you like about summer” prompt. Got a nice answer, very human-like.

Then I thought I’d ask it to give me the opposite sentiment. I believe I used those words (I am using short prompts, as the gpt4all version seems to just exit if the prompt is too long). WHEN I asked for the opposing sentiment, it THEN gave me the spiel that it is an AI bot and its algorithm or programming doesn’t allow it to give an opposing viewpoint. It explained (not sure if this is true or it was just making it up) that it has been programmed NOT to be biased. So that was sort of interesting. I should have kept the exact wording, but it is in the DOS shell.

Anyway, I then asked it to tell me what it dislikes about summer, so the opposing view. And it gave me another nice personal response about it being hot and sticky, etc. So it literally can give me the opposing view, just not in the manner I originally asked. I was trying to reason with it about that – it agreed that it can give me different opinions, yet it couldn’t see how that contradicted the original “can’t do that” response. I tried to reason further, but my prompt must have been too long and it just bailed, and then I had to get back to other things.

Still, the original answer, if true (it seemed authentic), makes some sense, in that giving an opposing view might be seen as a bias (the definition could be “opposite”, or it could be “against”, which would be a bias). That’s the best I could make of it. Anyway, that was the first time it told me it is an AI (I believe I am using the unfiltered version, which will be my default). I have yet to play with it extensively. My response was also different from the one posted online about what it liked about summer: similar themes, but different.

I was trying something with normal ChatGPT, and what I’ve sort of realised/found (might be obvious to everyone) is that the AI won’t admit that it lied.

It seems that because it has no “intentions”, it doesn’t believe that it has lied.

YET – when I present it with the situation (and after some arguing – I was wondering if it actually realised I was cornering it in the argument?) – it would admit that it acted in a way that would be seen as lying (putting all this in my words). Yet it would not actually say the words “yes, I lied to you.”

I will see if I can repeat this with the unfiltered version.

This to me is interesting – not because of the superficial lying aspect, but because it shows how these algorithms are created. There seems to be a point where the AI will respond intelligently, yet just will NOT do something. Whether it can’t or won’t, I am not sure. I wonder if this is part of the, let’s say, censorship aspect added by the developers, or if it is inherent in the actual LLM modelling process/AI algorithm.

Bit of a detour but anyway, trying to learn. There was a reason for me doing this too – unrelated to lying (but related to censorship).

Sorry – talking to myself. Won’t rabbit on about this. I can’t get the gpt4all to talk much about this as it is restricted. It doesn’t seem to have the “memory” of ChatGPT to continue a conversation. The prompt lengths are a bit small too. Which is ok. It seems to just like to provide an answer.

So I went back to ChatGPT to ask a bit more directly. This is the final response I got from simple questions –

Me: Thank you. I see – so your creators can determine what is ethical or not? You may be able to generate a variety of responses, some of which may unintentionally be considered illegal or unethical. BUT your creators can override that response with THEIR filters. I believe that is what you are explaining to me – am I understanding you? Thank you.

ChatGPT : Yes, that’s correct! My creators are responsible for designing and programming me to be consistent with ethical and legal standards, and they may use filters or other techniques to detect and minimize inappropriate or unethical responses in real-time. While I strive to generate responses that are accurate and informative, it is possible that I may generate responses that could be considered inappropriate or unethical in certain contexts. However, my creators have the ability to override or filter out such responses in order to ensure that my behavior is consistent with ethical and legal standards.

Taking that as true and accurate – that was my first question (to myself). The AI algorithm can generate any response. The creators – OpenAI, say – are the censors. Not sure if that is better or worse, but it is sort of significant. It is probably less likely that this can be circumvented (even if unfiltered), but perhaps intelligent prompting (say, using an analogy or some language trick) can get the AI to answer fully and not be restricted by filtering.

Won’t spend too much time on this any more. Yet it is also interesting IF the AI Algorithm cannot produce a certain answer. That would be a mystery perhaps. ??

Hi, I have a message “zsh: permission denied”, what can I do?

zsh – hmmm, give yourself access?

Administrator or sudo?
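One common cause of “zsh: permission denied” on macOS or Linux is that the downloaded program is not marked as executable. A minimal sketch, assuming a POSIX shell such as zsh or bash; `make_runnable` is a hypothetical helper name used for illustration, not part of GPT4All:

```shell
#!/bin/sh
# make_runnable is a HYPOTHETICAL helper for illustration, not part of GPT4All.
# It adds execute permission for the current user to a downloaded program,
# a common fix for "zsh: permission denied".
make_runnable() {
  target="$1"
  if [ ! -f "$target" ]; then
    # Usually a typo in the name or being in the wrong folder.
    echo "not found: $target" >&2
    return 1
  fi
  if [ ! -x "$target" ]; then
    chmod u+x "$target"
  fi
}

# Example, run from inside the folder that holds the chat program:
# make_runnable ./gpt4all-lora-quantized-OSX-m1 && ./gpt4all-lora-quantized-OSX-m1
```

Neither `chmod` nor `sudo` helps with the `gpt4all-lora-quantized.bin` file: that file holds the model data and is not a program, so trying to run it fails regardless of its permissions.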

So, in a month or two, can we expect a fully automated p-ai release that allows for a single key press, à la Linux installations for application packages?

Download the ‘prompt’ and then run everything from download to install resulting in a prompt?

Great question. Yes very likely.

Stupid question… I was able to get gpt4all initiated easy enough and I’m at the command prompt; what the heck do I do now? LOL Can someone give me a simple example?

Good afternoon friends! I have just downloaded and installed GPT4All-J. It all went as expected until I went to open it and I received this message: “You can’t open the application “chat” because this application is not supported on this Mac.” Does anyone have a workaround?

It’s all good now! I set it up via the terminal method (SECRET) and was just now able to install the app, including the GPT4ALL Model: gpt4all-j-v1.2-jazzy. Is there any way to add the unfiltered version?

Just set it up & it’s working.

One day I’d like to know how to do the SECRET method, but right now I’m more interested in training it.

I didn’t know which model to download so I did the first 3.

Another thing I’m not clear on is about Internet access or document retrieval.

I want to train it to assist me in developing Neurofeedback protocols.

Therefore it would need info on Neurofeedback, its protocols & Neuroanatomy.

Is there a way to train it now? Or can it or will it be a future thing?

Thanks.

So surprised by the knowledge of Neurofeedback.

Tested its knowledge using Mosaic & then Snoozy. Why Snoozy? Read on Reddit that it was seen as the least censored, um, safe.

Side note- have grown to hate the word safe. Beyond annoying. Like watching a Saturday morning cartoon, back in the day, and having every scene halted so that it can display a message of “don’t do this at home” or “only a trained stuntman can take an anvil to the head” & “shoving dynamite up your butt can be detrimental to your health” & so on.

I just want information. Not opinions.

Back to Neurofeedback… so far, I feel like AI is just a supercharged search engine that reads all the articles for me and then presents the info. Instead of me having to read numerous articles that a search engine would provide.

However, if AI is censoring the results or info for my “safety” (whatever the heck that might be), then I’m better off using a search engine & reading it for myself.

In Mac, why do you have different commands for the same file depending on the processor?

For Apple Silicon M1/M2 Mac/OSX: ./gpt4all-lora-quantized-OSX-m1

For Intel Mac/OSX: ./gpt4all-lora-quantized-OSX-intel

Regardless; I get an error message;

tomer@Tomers-MacBook-Pro gpt4all-chat % /gpt4all-lora-quantized-OSX-m1

zsh: no such file or directory: /gpt4all-lora-quantized-OSX-m1

The only thing that gave me a reaction was;

tomer@Tomers-MacBook-Pro gpt4all-chat % ./gpt4all-lora-quantized.bin

zsh: permission denied: ./gpt4all-lora-quantized.bin

So (on the advice of ChatGPT) I tried:

tomer@Tomers-MacBook-Pro gpt4all-chat % chmod +x gpt4all-lora-quantized.bin

This did not return an error message, but I don’t think the software is running either.

Trying to run it with sudo gave me the following error:

tomer@Tomers-MacBook-Pro gpt4all-chat % sudo ./gpt4all-lora-quantized.bin

Password:

./gpt4all-lora-quantized.bin: ./gpt4all-lora-quantized.bin: cannot execute binary file
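The two commands exist because Apple Silicon (M1/M2) and Intel Macs use different processor architectures, so each needs its own compiled program. A minimal sketch, assuming a POSIX shell and the two file names quoted above; `pick_binary` is a hypothetical helper that maps the output of `uname -m` (`arm64` on Apple Silicon, `x86_64` on Intel) to the matching program:

```shell
#!/bin/sh
# pick_binary is a HYPOTHETICAL helper for illustration, not part of GPT4All.
# It maps a machine architecture string (as printed by `uname -m`) to the
# GPT4All chat program built for that processor.
pick_binary() {
  case "$1" in
    arm64)  echo "./gpt4all-lora-quantized-OSX-m1" ;;
    x86_64) echo "./gpt4all-lora-quantized-OSX-intel" ;;
    *)      echo "unsupported architecture: $1" >&2; return 1 ;;
  esac
}

# Usage, from inside the folder that holds the chat programs:
# bin=$(pick_binary "$(uname -m)") && "$bin"
```

The leading `./` matters: `./name` runs a file in the current folder, while `/name` (as in the error above) looks for it at the root of the disk. A terminal running under Rosetta on an M1/M2 Mac reports `x86_64`, in which case this sketch would pick the Intel build.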

Okay, chat is running on command prompt. What now? Can anyone point me to an article?