There Is An Uncanny Amount Of Technology At Play in Animoji.

On February 19, 2017, Apple acquired RealFace [1], an Israeli cybersecurity and machine learning startup founded in 2014 that specialized in facial recognition technology, for an estimated $2 million or somewhat more. Most of the technology in ARKit comes from RealFace.

On November 24, 2013, Apple purchased PrimeSense for $360 million, and PrimeSense's technology also forms the foundation of ARKit. Apple additionally acquired Faceshift on November 24, 2015. Faceshift was a Zurich-based startup that had developed technology to create animated avatars and other figures that capture a person’s facial expressions in real time.

The new developer CIFace

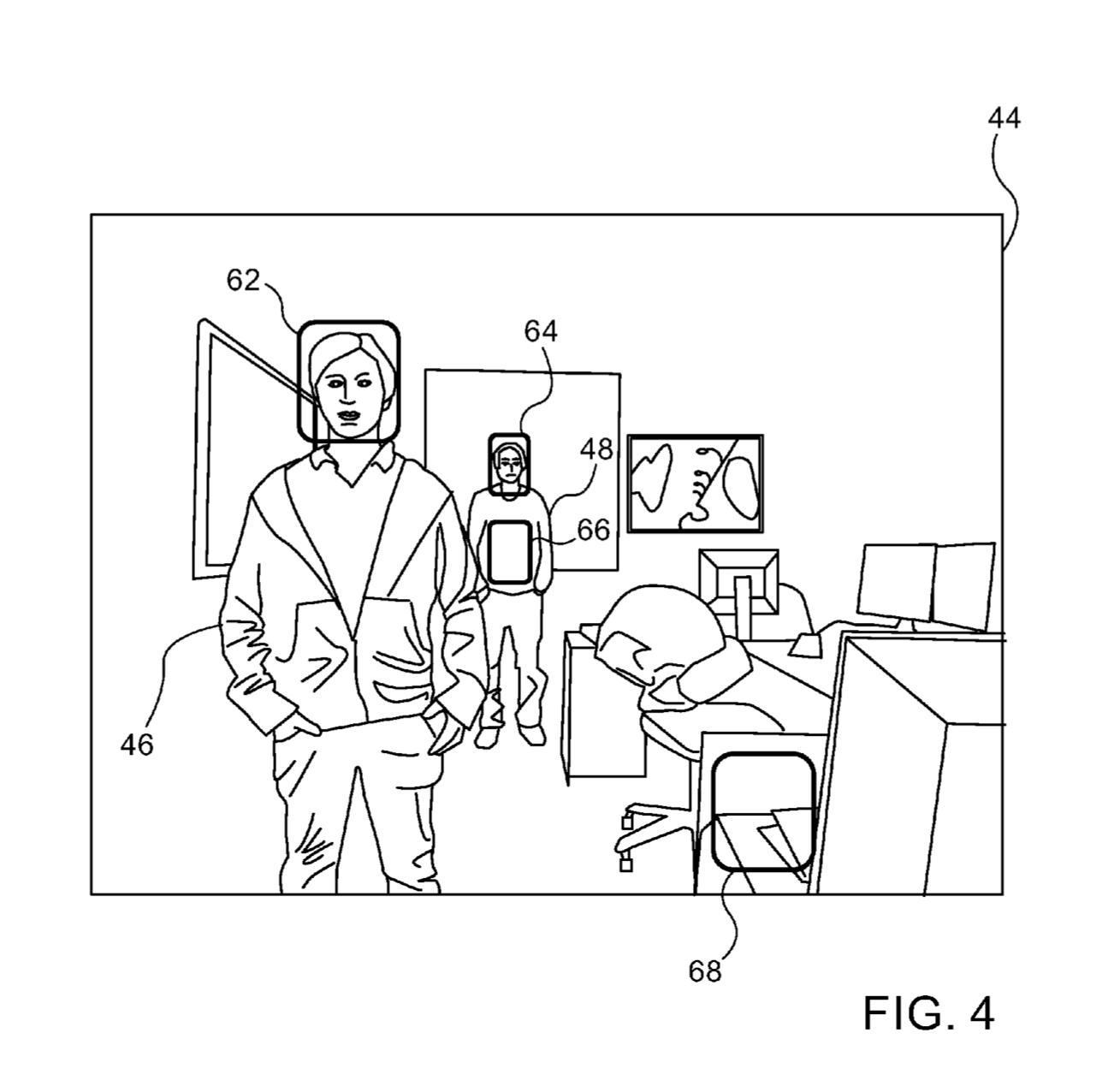

The technology from the “Enhanced face detection using depth information” patent [3] came from PrimeSense and was originally filed in 2015. Apple is using its own CIFace

The effect of combining the technology of RealFace and PrimeSense with another Apple acquisition, Emotient [4], is quite powerful. Apple acquired Emotient on January 7, 2016. Emotient’s algorithms make it possible to discern the most subtle expressions, or changes in expression, and translate them into a defined emotional reaction. The system performs face detection and automated expression analysis in real time. Its FACET technology, built on the Facial Action Coding System (FACS), applies machine learning to large datasets in order to gain accurate insight into emotions. Emotient built its work around that of Paul Ekman, Ph.D., a pioneer in the study of emotions and facial expressions and a professor emeritus of psychology in the Department of Psychiatry at the University of California Medical School, San Francisco, where he was active for 32 years. Emotient used AI to learn from his groundbreaking research on micro-expressions, applying machine learning methods to high-volume datasets that were carefully constructed by a team of behavioral scientists, including Dr. Ekman, who later joined Emotient’s advisory board.
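Emotient’s FACET models are proprietary and learned from large datasets, so the sketch below is not their method. It only illustrates the final step described above, translating facial muscle activity into a defined emotion, using a deliberately simple rule-based lookup. It assumes an upstream detector has already scored each FACS action unit (AU) on a 0.0 to 1.0 scale, and it uses prototype AU combinations commonly cited for Ekman’s basic emotions (for example AU6, cheek raiser, plus AU12, lip corner puller, for happiness). The `classify` function, the `PROTOTYPES` table and the 0.5 threshold are hypothetical choices for illustration.

```python
"""Toy FACS-style emotion lookup (illustrative only, NOT Emotient's FACET).

Assumption: some upstream detector has already estimated the intensity of
each FACS action unit (AU) on a 0.0-1.0 scale.
"""
from typing import Dict, FrozenSet, Tuple

# Commonly cited prototype AU combinations for basic emotions.
# Intensity grades (e.g. "5B") are dropped here for simplicity.
PROTOTYPES: Dict[str, FrozenSet[int]] = {
    "happiness": frozenset({6, 12}),
    "sadness": frozenset({1, 4, 15}),
    "surprise": frozenset({1, 2, 5, 26}),
    "fear": frozenset({1, 2, 4, 5, 7, 20, 26}),
    "anger": frozenset({4, 5, 7, 23}),
    "disgust": frozenset({9, 15, 16}),
}


def classify(au_intensity: Dict[int, float],
             threshold: float = 0.5) -> Tuple[str, float]:
    """Return (label, score), where score is the mean intensity of the
    best-matching prototype's AUs; below `threshold` the face is "neutral"."""
    best_label, best_score = "neutral", 0.0
    for label, aus in PROTOTYPES.items():
        # AUs missing from the input are treated as inactive (0.0).
        score = sum(au_intensity.get(au, 0.0) for au in aus) / len(aus)
        if score > best_score:
            best_label, best_score = label, score
    if best_score < threshold:
        return "neutral", best_score
    return best_label, best_score


if __name__ == "__main__":
    # Cheek raiser (AU6) + lip corner puller (AU12): a Duchenne-style smile.
    label, score = classify({6: 0.8, 12: 0.9})
    print(label, f"{score:.2f}")  # happiness 0.85
```

Averaging over each prototype keeps the example readable; a learned system of the kind the paragraph describes would instead weigh AUs, their timing and their intensities with a trained model.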

Apple will use Emotient’s technology in a number of ways. Emotional intent extraction will be very useful for Voice First AI, helping it discern the intent of a conversation on many levels. The first use of Emotient’s technology will be hard to see, but it will be featured in the dynamically animated Emoji avatars. The faces of these Emoji seem uncanny in the way they display emotion, and this is no accident. Paul Ekman’s work studied the 43 facial muscles that produce thousands of nuances of expression and emotional intent, the same cues we first learn to read in the faces of our parents. Those cues shape how we interpret the world, acting as an extended sensor that helps us learn the basic emotions and reactions to the world around us. Apple will use this knowledge to dynamically match and fine-tune the faces of the Emoji to your nuanced emotional states.

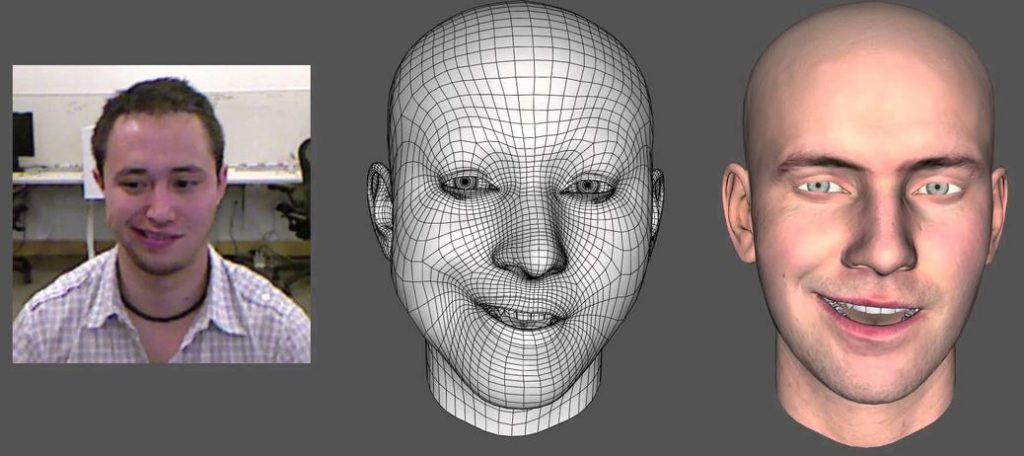

The Emotient technology will use FACET to read the user’s facial actions and meticulously reconstruct the 43 muscle movements and emotional intents onto the three-dimensional Emoji avatar. The effect is uncanny in a number of ways. We have not seen a consumer-grade system so accurately portray the subtle micro-moments that FACET technology can convey. This goes beyond what is found in most high-end CGI systems used by Hollywood, because the underlying code is based on AI-driven Emotient technology. Prior CGI systems do not show the same fluidity and range of emotion.
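Apple has not published how Animoji drives its characters, so the following is a hedged sketch of one standard technique, linear blendshapes, for transferring tracked expressions onto a 3D avatar. ARKit does expose per-frame expression coefficients in the range 0.0 to 1.0 through `ARFaceAnchor.blendShapes` (keys such as `jawOpen` or `eyeBlinkLeft`); here each such coefficient is assumed to scale an artist-made per-vertex offset ("delta") on the avatar mesh. The `blend` function and the tiny two-vertex mesh are hypothetical.

```python
"""Linear blendshape retargeting sketch (assumed technique, not Apple's code).

Deformed vertex = neutral vertex + sum_i(weight_i * delta_i).
"""
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def blend(neutral: List[Vec3],
          deltas: Dict[str, List[Vec3]],
          weights: Dict[str, float]) -> List[Vec3]:
    """Deform an avatar mesh from tracked expression weights.

    `deltas[name]` holds per-vertex offsets for one expression target
    (e.g. a hypothetical "jawOpen" target sculpted by an artist);
    `weights[name]` is the tracked coefficient for that target.
    Weights without a matching target are ignored.
    """
    out = [list(v) for v in neutral]
    for name, w in weights.items():
        w = min(max(w, 0.0), 1.0)  # clamp noisy tracker output to [0, 1]
        for i, (dx, dy, dz) in enumerate(deltas.get(name, [])):
            out[i][0] += w * dx
            out[i][1] += w * dy
            out[i][2] += w * dz
    return [tuple(v) for v in out]


if __name__ == "__main__":
    # Two-vertex "mouth": the second vertex drops when the jaw opens.
    neutral = [(0.0, 0.0, 0.0), (0.0, -1.0, 0.0)]
    deltas = {"jawOpen": [(0.0, 0.0, 0.0), (0.0, -0.5, 0.0)]}
    print(blend(neutral, deltas, {"jawOpen": 0.5}))
    # [(0.0, 0.0, 0.0), (0.0, -1.25, 0.0)]
```

Because the model is linear, each tracked coefficient contributes independently, which is what makes this approach cheap enough to run every frame; richer systems layer corrective shapes or learned models on top.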

It is also uncanny with respect to the much-discussed and somewhat inaccurate thesis of the “Uncanny Valley” [5]. The concept was identified by robotics professor Masahiro Mori as “Bukimi no Tani Genshō”. Mori’s hypothesis is that entities approaching human appearance will necessarily be evaluated negatively; however, assigning cartoonish features to entities that had formerly fallen into the valley has been shown to lift them out of it. Uncanny entities may appear anywhere on a spectrum ranging from the abstract to the perfectly human. Phenomena labeled as being in the uncanny valley can be diverse, involve different sense modalities, and have multiple, possibly overlapping causes, which can range from evolved or learned circuits for early face perception to culturally shared psychological constructs. The cultural background of individuals will have a considerable influence on how avatars are perceived with respect to the uncanny valley. Younger cohorts raised on CGI movies and games may be less likely to be affected by this hypothesized issue.

Apple is wisely starting with cute and whimsical Emoji avatars to present this technology. However, in the future any three-dimensional avatar, or even an actual image, can be made to beat the “Uncanny Valley” and use FACET technology to portray facial expression accurately.

The impact on the future of VR and AR will be deep, as this technology will change the way we communicate and interact. FACET technology will become integrated into many aspects of Apple’s AI systems and will ultimately form the basis of a true Personal Assistant that can determine your intent more correctly, and perhaps more profoundly, through your facial expressions and your true emotions.

_____

[1] Why did Apple acquire facial recognition company RealFace?

[2] https://developer.apple.com/documentation/coreimage/cifacefeature

[3] Apple Patent

[5] Uncanny valley

~—~

Subscribe ($99) or donate by Bitcoin.

Copy address: bc1q9dsdl4auaj80sduaex3vha880cxjzgavwut5l2

Send your receipt to Love@ReadMultiplex.com to confirm subscription.

IMPORTANT: Any reproduction, copying, or redistribution, in whole or in part, is prohibited without written permission from the publisher. Information contained herein is obtained from sources believed to be reliable, but its accuracy cannot be guaranteed. We are not financial advisors, nor do we give personalized financial advice. The opinions expressed herein are those of the publisher and are subject to change without notice. It may become outdated, and there is no obligation to update any such information. Recommendations should be made only after consulting with your advisor and only after reviewing the prospectus or financial statements of any company in question. You shouldn’t make any decision based solely on what you read here. Postings here are intended for informational purposes only. The information provided here is not intended to be a substitute for professional medical advice, diagnosis, or treatment. Always seek the advice of your physician or other qualified healthcare provider with any questions you may have regarding a medical condition. Information here does not endorse any specific tests, products, procedures, opinions, or other information that may be mentioned on this site. Reliance on any information provided, employees, others appearing on this site at the invitation of this site, or other visitors to this site is solely at your own risk.

Copyright Notice:

All content on this website, including text, images, graphics, and other media, is the property of Read Multiplex or its respective owners and is protected by international copyright laws. We make every effort to ensure that all content used on this website is either original or used with proper permission and attribution when available.

However, if you believe that any content on this website infringes upon your copyright, please contact us immediately using our 'Reach Out' link in the menu. We will promptly remove any infringing material upon verification of your claim. Please note that we are not responsible for any copyright infringement that may occur as a result of user-generated content or third-party links on this website. Thank you for respecting our intellectual property rights.